-

Quick start

-

API

-

-

-

-

-

-

- Dense

- PReLU 2D

- PReLU 3D

- PReLU 4D

- PReLU 5D

- AdditiveAttention

- Attention

- MutiHeadAttention

- Conv1D

- Conv2D

- Conv3D

- ConvLSTM1D

- ConvLSTM2D

- ConvLSTM3D

- Conv1DTranspose

- Conv2DTranspose

- Conv3DTranspose

- DepthwiseConv2D

- SeparableConv1D

- SeparableConv2D

- Embedding

- BatchNormalization

- LayerNormalization

- Bidirectional

- GRU

- LSTM

- SimpleRNN

- Show All Articles ( 12 ) Collapse Articles

-

- Dense

- PReLU 2D

- PReLU 3D

- PReLU 4D

- PReLU 5D

- AdditiveAttention

- Attention

- MultiHeadAttention

- Conv1D

- Conv2D

- Conv3D

- ConvLSTM1D

- ConvLSTM2D

- ConvLSTM3D

- Conv1DTranspose

- Conv2DTranspose

- Conv3DTranspose

- DepthwiseConv2D

- SeparableConv1D

- SeparableConv2D

- Embedding

- BatchNormalization

- LayerNormalization

- Bidirectional

- GRU

- LSTM

- SimpleRNN

- Show All Articles ( 12 ) Collapse Articles

-

-

- Dense

- AdditiveAttention

- Attention

- MultiHeadAttention

- BatchNormalization

- LayerNormalization

- Bidirectional

- GRU

- LSTM

- SimpleRNN

- Conv1D

- Conv2D

- Conv3D

- Conv1DTranspose

- Conv2DTranspose

- Conv3DTranspose

- ConvLSTM1D

- ConvLSTM2D

- ConvLSTM3D

- DepthwiseConv2D

- SeparableConv1D

- SeparableConv2D

- Embedding

- PReLU 2D

- PReLU 3D

- PReLU 4D

- PReLU 5D

- Show All Articles ( 12 ) Collapse Articles

-

-

- Dense

- Embedding

- AdditiveAttention

- Attention

- MultiHeadAttention

- Conv1D

- Conv2D

- Conv3D

- ConvLSTM1D

- ConvLSTM2D

- ConvLSTM3D

- Conv1DTranspose

- Conv2DTranspose

- Conv3DTranspose

- DepthwiseConv2D

- SeparableConv1D

- SeparableConv2D

- BatchNormalization

- LayerNormalization

- PReLU 2D

- PReLU 3D

- PReLU 4D

- PReLU 5D

- Bidirectional

- GRU

- LSTM

- RNN (GRU)

- RNN (LSTM)

- RNN (SimpleRNN)

- SimpleRNN

- Show All Articles ( 15 ) Collapse Articles

-

- Dense

- Embedding

- AdditiveAttention

- Attention

- MultiHeadAttention

- Conv1D

- Conv2D

- Conv3D

- ConvLSTM1D

- ConvLSTM2D

- ConvLSTM3D

- Conv1DTranspose

- Conv2DTranspose

- Conv3DTranspose

- DepthwiseConv2D

- SeparableConv1D

- SeparableConv2D

- BatchNormalization

- LayerNormalization

- PReLU 2D

- PReLU 3D

- PReLU 4D

- PReLU 5D

- Bidirectional

- GRU

- LSTM

- RNN (GRU)

- RNN (LSTM)

- RNN (SimpleRNN)

- SimpleRNN

- Show All Articles ( 15 ) Collapse Articles

-

-

-

- Dense

- Embedding

- AdditiveAttention

- Attention

- MultiHeadAttention

- Conv1D

- Conv2D

- Conv3D

- ConvLSTM1D

- ConvLSTM2D

- ConvLSTM3D

- Conv1DTranspose

- Conv2DTranspose

- Conv3DTranspose

- DepthwiseConv2D

- SeparableConv1D

- SeparableConv2D

- BatchNormalization

- LayerNormalization

- PReLU 2D

- PReLU 3D

- PReLU 4D

- PReLU 5D

- Bidirectional

- GRU

- LSTM

- RNN (GRU)

- RNN (LSTM)

- RNN (SimpleRNN)

- SimpleRNN

- Show All Articles ( 15 ) Collapse Articles

-

- Dense

- Embedding

- AdditiveAttention

- Attention

- MultiHeadAttention

- Conv1D

- Conv2D

- Conv3D

- ConvLSTM1D

- ConvLSTM2D

- ConvLSTM3D

- Conv1DTranspose

- Conv2DTranspose

- Conv3DTranspose

- DepthwiseConv2D

- SeparableConv1D

- SeparableConv2D

- BatchNormalization

- LayerNormalization

- PReLU 2D

- PReLU 3D

- PReLU 4D

- PReLU 5D

- Bidirectional

- GRU

- LSTM

- RNN (GRU)

- RNN (LSTM)

- RNN (SimpleRNN)

- SimpleRNN

- Show All Articles ( 15 ) Collapse Articles

-

-

-

-

- Add

- AdditiveAttention

- AlphaDropout

- Attention

- Average

- AvgPool1D

- AvgPool2D

- AvgPool3D

- BatchNormalization

- Bidirectional

- Concatenate

- Conv1D

- Conv1DTranspose

- Conv2D

- Conv2DTranspose

- Conv3D

- Conv3DTranspose

- ConvLSTM1D

- ConvLSTM2D

- ConvLSTM3D

- Cropping1D

- Cropping2D

- Cropping3D

- Dense

- DepthwiseConv2D

- Dropout

- Embedding

- Flatten

- GaussianDropout

- GaussianNoise

- GlobalAvgPool1D

- GlobalAvgPool2D

- GlobalAvgPool3D

- GlobalMaxPool1D

- GlobalMaxPool2D

- GlobalMaxPool3D

- GRU

- Input

- LayerNormalization

- LSTM

- MaxPool1D

- MaxPool2D

- MaxPool3D

- MultiHeadAttention

- Multiply

- Permute3D

- Reshape

- RNN

- SeparableConv1D

- SeparableConv2D

- SimpleRNN

- SpatialDropout

- Substract

- TimeDistributed

- UpSampling1D

- UpSampling2D

- UpSampling3D

- ZeroPadding1D

- ZeroPadding2D

- ZeroPadding3D

- Show All Articles ( 45 ) Collapse Articles

-

- AlphaDropout

- AvgPool1D

- AvgPool2D

- AvgPool3D

- BatchNormalization

- Bidirectional

- Conv1D

- Conv1DTranspose

- Conv2D

- Conv2DTranspose

- Conv3D

- Conv3DTranspose

- Cropping1D

- Cropping2D

- Cropping3D

- Dense

- DepthwiseConv2D

- Dropout

- Embedding

- Flatten

- GaussianDropout

- GaussianNoise

- GlobalAvgPool1D

- GlobalAvgPool2D

- GlobalAvgPool3D

- GlobalMaxPool1D

- GlobalMaxPool2D

- GlobalMaxPool3D

- GRU

- LayerNormalization

- LSTM

- MaxPool1D

- MaxPool2D

- MaxPool3D

- Permute3D

- Reshape

- RNN

- SeparableConv1D

- SeparableConv2D

- SimpleRNN

- SpatialDropout

- UpSampling1D

- UpSampling2D

- UpSampling3D

- ZeroPadding1D

- ZeroPadding2D

- ZeroPadding3D

- Show All Articles ( 32 ) Collapse Articles

-

-

-

- Resume

- Accuracy

- BinaryAccuracy

- BinaryCrossentropy

- BinaryIoU

- CategoricalAccuracy

- CategoricalCrossentropy

- CategoricalHinge

- CosineSimilarity

- FalseNegatives

- FalsePositives

- Hinge

- Huber

- IoU

- KLDivergence

- LogCoshError

- Mean

- MeanAbsoluteError

- MeanAbsolutePercentageError

- MeanIoU

- MeanRelativeError

- MeanSquaredError

- MeanSquaredLogarithmicError

- MeanTensor

- OneHotIoU

- OneHotMeanIoU

- Poisson

- Precision

- PrecisionAtRecall

- Recall

- RecallAtPrecision

- RootMeanSquaredError

- SensitivityAtSpecificity

- SparseCategoricalAccuracy

- SparseCategoricalCrossentropy

- SparseTopKCategoricalAccuracy

- Specificity

- SpecificityAtSensitivity

- SquaredHinge

- Sum

- TopKCategoricalAccuracy

- TrueNegatives

- TruePositives

- Show All Articles ( 28 ) Collapse Articles

-

- Resume

- Constant

- GlorotNormal

- GlorotUniform

- HeNormal

- HeUniform

- Identity

- LecunNormal

- LecunUniform

- Ones

- Orthogonal

- RandomNormal

- RandomUnifom

- TruncatedNormal

- VarianceScaling

- Zeros

- Show All Articles ( 1 ) Collapse Articles

-

Get all loss type

Description

Gets the information related to the loss of each layer contained in the model.

Input parameters

![]() Model in : model architecture.

Model in : model architecture.

Output parameters

![]() Model out : model architecture.

Model out : model architecture.

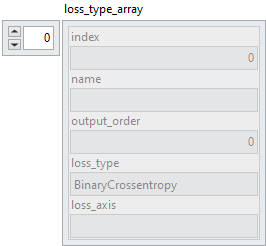

![]() index : integer, index of layer.

index : integer, index of layer.

![]() name : string, name of layer.

name : string, name of layer.

![]() output_order : integer, output number.

output_order : integer, output number.

![]() loss_type : enum, name of the losse used by the layer.

loss_type : enum, name of the losse used by the layer.

![]() loss_axis : string, the axis on which the losse performs its calculation.

loss_axis : string, the axis on which the losse performs its calculation.

Example

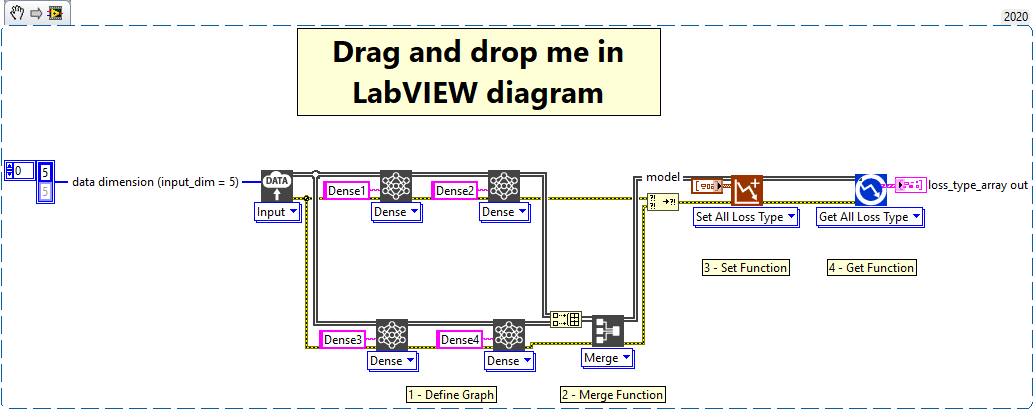

All these exemples are snippets PNG, you can drop these Snippet onto the block diagram and get the depicted code added to your VI (Do not forget to install HAIBAL library to run it).

Using the “Get All Loss Type” function

1 – Define Graph

We define two graphs with one input and two dense layers. In the first graph the dense layers are named Dense1 and Dense2. In the second one we named the layers Dense3 and Dense4.

2 – Merge Function

We use the “Merge” function to merge the two graphs.

3 – Set Function

The “Set All Loss Type” function is used to define the loss to be applied to each graph. For the first graph we will apply the “MeanSquare” loss on axis 1 and for the second we will apply the “BinaryCrossentropy” loss on axis 0.

2 – Get Function

We use the function “Get All Loss Type” to get all the loss applied to the model.