-

Quick start

-

API

-

-

- Resume

- Add

- AdditiveAttention

- AlphaDropout

- Attention

- Average

- AvgPool1D

- AvgPool2D

- AvgPool3D

- BatchNormalization

- Bidirectional

- Concatenate

- Conv1D

- Conv1DTranspose

- Conv2D

- Conv2DTranspose

- Conv3D

- Conv3DTranspose

- ConvLSTM1D

- ConvLSTM2D

- ConvLSTM3D

- Cropping1D

- Cropping2D

- Cropping3D

- Dense

- DepthwiseConv2D

- Dropout

- Embedding

- Flatten

- GaussianDropout

- GaussianNoise

- GlobalAvgPool1D

- GlobalAvgPool2D

- GlobalAvgPool3D

- GlobalMaxPool1D

- GlobalMaxPool2D

- GlobalMaxPool3D

- GRU

- Input

- LayerNormalization

- LSTM

- MaxPool1D

- MaxPool2D

- MaxPool3D

- MultiHeadAttention

- Multiply

- Output Predict

- Output Train

- Permute3D

- Reshape

- RNN

- SeparableConv1D

- SeparableConv2D

- SimpleRNN

- SpatialDropout

- Split

- Substract

- UpSampling1D

- UpSampling2D

- UpSampling3D

- ZeroPadding1D

- ZeroPadding2D

- ZeroPadding3D

- Show All Articles ( 48 ) Collapse Articles

-

-

-

-

-

- Resume

- Constant

- GlorotNormal

- GlorotUniform

- HeNormal

- HeUniform

- Identity

- LecunNormal

- LecunUniform

- Ones

- Orthogonal

- RandomNormal

- RandomUnifom

- TruncatedNormal

- VarianceScaling

- Zeros

- Show All Articles ( 1 ) Collapse Articles

-

-

-

-

-

-

-

- Dense

- PReLU 2D

- PReLU 3D

- PReLU 4D

- PReLU 5D

- AdditiveAttention

- Attention

- MutiHeadAttention

- Conv1D

- Conv2D

- Conv3D

- ConvLSTM1D

- ConvLSTM2D

- ConvLSTM3D

- Conv1DTranspose

- Conv2DTranspose

- Conv3DTranspose

- DepthwiseConv2D

- SeparableConv1D

- SeparableConv2D

- Embedding

- BatchNormalization

- LayerNormalization

- Bidirectional

- GRU

- LSTM

- SimpleRNN

- Show All Articles ( 12 ) Collapse Articles

-

- Dense

- PReLU 2D

- PReLU 3D

- PReLU 4D

- PReLU 5D

- AdditiveAttention

- Attention

- MultiHeadAttention

- Conv1D

- Conv2D

- Conv3D

- ConvLSTM1D

- ConvLSTM2D

- ConvLSTM3D

- Conv1DTranspose

- Conv2DTranspose

- Conv3DTranspose

- DepthwiseConv2D

- SeparableConv1D

- SeparableConv2D

- Embedding

- BatchNormalization

- LayerNormalization

- Bidirectional

- GRU

- LSTM

- SimpleRNN

- Show All Articles ( 12 ) Collapse Articles

-

-

- Dense

- AdditiveAttention

- Attention

- MultiHeadAttention

- BatchNormalization

- LayerNormalization

- Bidirectional

- GRU

- LSTM

- SimpleRNN

- Conv1D

- Conv2D

- Conv3D

- Conv1DTranspose

- Conv2DTranspose

- Conv3DTranspose

- ConvLSTM1D

- ConvLSTM2D

- ConvLSTM3D

- DepthwiseConv2D

- SeparableConv1D

- SeparableConv2D

- Embedding

- PReLU 2D

- PReLU 3D

- PReLU 4D

- PReLU 5D

- Show All Articles ( 12 ) Collapse Articles

-

-

- Dense

- Embedding

- AdditiveAttention

- Attention

- MultiHeadAttention

- Conv1D

- Conv2D

- Conv3D

- ConvLSTM1D

- ConvLSTM2D

- ConvLSTM3D

- Conv1DTranspose

- Conv2DTranspose

- Conv3DTranspose

- DepthwiseConv2D

- SeparableConv1D

- SeparableConv2D

- BatchNormalization

- LayerNormalization

- PReLU 2D

- PReLU 3D

- PReLU 4D

- PReLU 5D

- Bidirectional

- GRU

- LSTM

- RNN (GRU)

- RNN (LSTM)

- RNN (SimpleRNN)

- SimpleRNN

- Show All Articles ( 15 ) Collapse Articles

-

- Dense

- Embedding

- AdditiveAttention

- Attention

- MultiHeadAttention

- Conv1D

- Conv2D

- Conv3D

- ConvLSTM1D

- ConvLSTM2D

- ConvLSTM3D

- Conv1DTranspose

- Conv2DTranspose

- Conv3DTranspose

- DepthwiseConv2D

- SeparableConv1D

- SeparableConv2D

- BatchNormalization

- LayerNormalization

- PReLU 2D

- PReLU 3D

- PReLU 4D

- PReLU 5D

- Bidirectional

- GRU

- LSTM

- RNN (GRU)

- RNN (LSTM)

- RNN (SimpleRNN)

- SimpleRNN

- Show All Articles ( 15 ) Collapse Articles

-

-

-

- Dense

- Embedding

- AdditiveAttention

- Attention

- MultiHeadAttention

- Conv1D

- Conv2D

- Conv3D

- ConvLSTM1D

- ConvLSTM2D

- ConvLSTM3D

- Conv1DTranspose

- Conv2DTranspose

- Conv3DTranspose

- DepthwiseConv2D

- SeparableConv1D

- SeparableConv2D

- BatchNormalization

- LayerNormalization

- PReLU 2D

- PReLU 3D

- PReLU 4D

- PReLU 5D

- Bidirectional

- GRU

- LSTM

- RNN (GRU)

- RNN (LSTM)

- RNN (SimpleRNN)

- SimpleRNN

- Show All Articles ( 15 ) Collapse Articles

-

- Dense

- Embedding

- AdditiveAttention

- Attention

- MultiHeadAttention

- Conv1D

- Conv2D

- Conv3D

- ConvLSTM1D

- ConvLSTM2D

- ConvLSTM3D

- Conv1DTranspose

- Conv2DTranspose

- Conv3DTranspose

- DepthwiseConv2D

- SeparableConv1D

- SeparableConv2D

- BatchNormalization

- LayerNormalization

- PReLU 2D

- PReLU 3D

- PReLU 4D

- PReLU 5D

- Bidirectional

- GRU

- LSTM

- RNN (GRU)

- RNN (LSTM)

- RNN (SimpleRNN)

- SimpleRNN

- Show All Articles ( 15 ) Collapse Articles

-

-

-

- Resume

- Accuracy

- BinaryAccuracy

- BinaryCrossentropy

- BinaryIoU

- CategoricalAccuracy

- CategoricalCrossentropy

- CategoricalHinge

- CosineSimilarity

- FalseNegatives

- FalsePositives

- Hinge

- Huber

- IoU

- KLDivergence

- LogCoshError

- Mean

- MeanAbsoluteError

- MeanAbsolutePercentageError

- MeanIoU

- MeanRelativeError

- MeanSquaredError

- MeanSquaredLogarithmicError

- MeanTensor

- OneHotIoU

- OneHotMeanIoU

- Poisson

- Precision

- PrecisionAtRecall

- Recall

- RecallAtPrecision

- RootMeanSquaredError

- SensitivityAtSpecificity

- SparseCategoricalAccuracy

- SparseCategoricalCrossentropy

- SparseTopKCategoricalAccuracy

- Specificity

- SpecificityAtSensitivity

- SquaredHinge

- Sum

- TopKCategoricalAccuracy

- TrueNegatives

- TruePositives

- Show All Articles ( 28 ) Collapse Articles

-

-

Summary

Description

Returns the summary of the model. Possibility to retrieve a cluster or a text with the information and save it in a file. This information consists of the successors, predecessors, the output shape, the layer and its name.

Input parameters

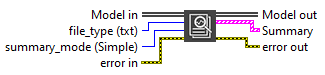

![]() Model in : model architecture.

Model in : model architecture.![]() file_type : enum, type of the file on which the summary is written.

file_type : enum, type of the file on which the summary is written.

-

- None : returns the summary only in a cluster array.

- txt : returns the summary in a text file and cluster array. (default)

- csv : returns the summary in a comma-separated values (csv) file and cluster array.

![]() summary_mode : enum, display mode of the summary.

summary_mode : enum, display mode of the summary.

-

- Simple : displays simple information (index, layer, input shape, output shape, predecessors, successors, parameters).

- Advanced : displays advanced information (index, layer, input shape, output shape, predecessors, successors, parameters, data format, init weight, weights shape).

- Complete : displays complete information (index, layer, input shape, output shape, predecessors, successors, parameters, data format, init weight, weights shape, boolean “training?”, boolean “update?”, boolean “store?”, loss derivative attenuation).

Output parameters

![]() Model out : model architecture.

Model out : model architecture.

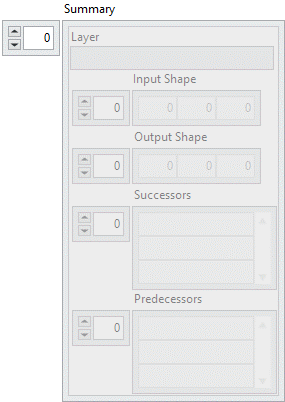

![]() Summary : array

Summary : array

![]() Layer : string, type and name of layer.

Layer : string, type and name of layer.![]() Input Shape : integer array, input size of the layer.

Input Shape : integer array, input size of the layer.![]() Output Shape : integer array, output size of the layer.

Output Shape : integer array, output size of the layer.![]() Successors : string array, the layer(s) connected to the output of the current layer.

Successors : string array, the layer(s) connected to the output of the current layer.![]() Predecessors : string array, the layer(s) connected to the input of the current layer.

Predecessors : string array, the layer(s) connected to the input of the current layer.

![]() Text Summary : string,

Text Summary : string,

Example

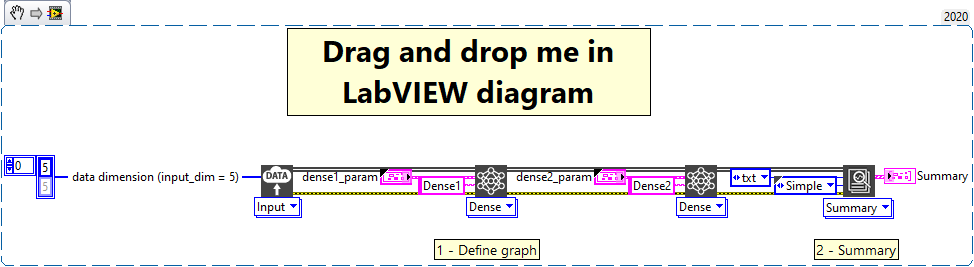

All these exemples are snippets PNG, you can drop these Snippet onto the block diagram and get the depicted code added to your VI (Do not forget to install HAIBAL library to run it).

Simple summary of the model in a text file

1 – Define Graph

We define the graph with one input and two Dense layers named Dense1 and Dense2.

2 – Summary

We use the “Summary” function to have the model information in a text file.