algorithm

algorithm: enum, the name of the optimizer used by the optimizer instance.

Default value: “adam”.

Adadelta

Adadelta scales the learning rate based on the historical gradient. Unlike AdaGrad, which uses the entire history, it considers only a recent time window. It also uses a component that serves as an acceleration term and accumulates historical updates (similar to momentum).

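$$
\begin{aligned}
E[g^2]_t &= \rho\,E[g^2]_{t-1} + (1-\rho)\,g_t^2 \\
\Delta w_t &= \frac{\sqrt{E[\Delta w^2]_{t-1} + \epsilon}}{\sqrt{E[g^2]_t + \epsilon}}\,g_t \\
E[\Delta w^2]_t &= \rho\,E[\Delta w^2]_{t-1} + (1-\rho)\,\Delta w_t^2 \\
w_t &= w_{t-1} - \eta\,\Delta w_t
\end{aligned}
$$

where:

- $E[g^2]_t$ are the accumulated (squared) gradients.
- $E[\Delta w^2]_t$ are the accumulated (squared) updates.
- $\rho$ is the decay constant.
- $g_t$ are the gradients.
- $\eta$ is the learning rate.
- $\epsilon$ is the numerical stability term (1e-7).
- $\Delta w_t$ are the rescaled gradients.
- $w_t$ are the weights.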

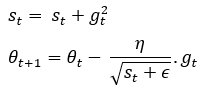
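The sketch below is a minimal Python version of the Adadelta update for a single scalar weight. For tensors the rule applies elementwise. The function name `adadelta_step`, the `state` dictionary and the defaults `lr=1.0` and `rho=0.95` are illustrative assumptions, not the toolkit's API or defaults. Only the 1e-7 stability constant comes from this page.

```python
import math

def adadelta_step(w, g, state, lr=1.0, rho=0.95, eps=1e-7):
    """One Adadelta update of scalar weight w given its gradient g.

    `state` holds the two running averages and is updated in place.
    """
    # Decaying average of squared gradients: only a recent window matters.
    state["acc_grad"] = rho * state["acc_grad"] + (1 - rho) * g * g
    # Rescaled gradient: ratio of RMS(past updates) to RMS(gradients).
    delta = (math.sqrt(state["acc_update"] + eps)
             / math.sqrt(state["acc_grad"] + eps)) * g
    # Decaying average of squared updates (the momentum-like acceleration term).
    state["acc_update"] = rho * state["acc_update"] + (1 - rho) * delta * delta
    return w - lr * delta

# Usage: minimize f(w) = w**2, whose gradient is 2*w.
state = {"acc_grad": 0.0, "acc_update": 0.0}
w = 1.0
for _ in range(100):
    w = adadelta_step(w, 2 * w, state)
print(w)
```

The step is scaled by the RMS of past updates, so the first steps are small, on the order of the square root of `eps`. They grow as updates accumulate.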

Adagrad

Adagrad is an optimizer with parameter-specific learning rates, which are adapted according to how frequently a parameter is updated during training: the more updates a parameter receives, the smaller its updates become.

$$
\begin{aligned}
G_t &= G_{t-1} + g_t^2 \\
w_t &= w_{t-1} - \frac{\eta}{\sqrt{G_t + \epsilon}}\,g_t
\end{aligned}
$$

where:

- $G_t$ is the momentum, i.e. the running sum of squared past gradients.
- $g_t$ are the gradients of the parameters we want to update.
- $\eta$ is the learning rate.
- $\epsilon$ is a smoothing term (avoids division by zero).
- $w_t$ are the weights.
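The following minimal Python sketch shows the Adagrad rule for one scalar weight. It makes visible how repeated updates shrink the effective step. The names `adagrad_step` and `state` and the default `lr=0.01` are illustrative assumptions, not the toolkit's API.

```python
import math

def adagrad_step(w, g, state, lr=0.01, eps=1e-7):
    """One Adagrad update of scalar weight w given its gradient g."""
    # Running sum of squared gradients over the entire history.
    state["acc"] += g * g
    # The more (and larger) updates so far, the smaller the effective step.
    return w - lr * g / math.sqrt(state["acc"] + eps)

state = {"acc": 0.0}
w0 = 1.0
w1 = adagrad_step(w0, 2.0, state)  # first step with gradient 2
w2 = adagrad_step(w1, 2.0, state)  # same gradient again
print(w0 - w1, w1 - w2)            # the second step is smaller
```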

Adam

Adam optimization is a stochastic gradient descent method based on adaptive estimation of the first-order and second-order moments of the gradients.

$$
\begin{aligned}
m_t &= \beta_1 m_{t-1} + (1-\beta_1)\,g_t \\
v_t &= \beta_2 v_{t-1} + (1-\beta_2)\,g_t^2 \\
\hat m_t &= \frac{m_t}{1-\beta_1^t}, \qquad \hat v_t = \frac{v_t}{1-\beta_2^t} \\
w_t &= w_{t-1} - \eta\,\frac{\hat m_t}{\sqrt{\hat v_t} + \epsilon}
\end{aligned}
$$

where:

- $m_t$ and $v_t$ are the first and second moment estimates.
- $\hat m_t$ and $\hat v_t$ are the bias-corrected first and second moment estimates.
- $\beta_1$ and $\beta_2$ are the momentum coefficients.
- $g_t$ are the gradients of the parameters we want to update.
- $\eta$ is the learning rate.
- $\epsilon$ is a smoothing term (avoids division by zero).
- $w_t$ are the weights.

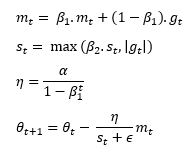
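A minimal Python sketch of one Adam step for a scalar weight follows, assuming the standard bias-corrected formulation. The name `adam_step`, the `state` keys and the default hyperparameters are illustrative assumptions, not the toolkit's API or defaults.

```python
import math

def adam_step(w, g, state, lr=0.001, beta1=0.9, beta2=0.999, eps=1e-7):
    """One Adam update of scalar weight w given its gradient g."""
    state["t"] += 1
    t = state["t"]
    # Exponential moving averages of the gradient and the squared gradient.
    state["m"] = beta1 * state["m"] + (1 - beta1) * g
    state["v"] = beta2 * state["v"] + (1 - beta2) * g * g
    # Bias correction: both averages start at zero.
    m_hat = state["m"] / (1 - beta1 ** t)
    v_hat = state["v"] / (1 - beta2 ** t)
    return w - lr * m_hat / (math.sqrt(v_hat) + eps)

state = {"t": 0, "m": 0.0, "v": 0.0}
w = adam_step(1.0, 2.0, state)
print(w)  # the first step moves w by about lr
```

After bias correction, the first step equals roughly `lr * sign(g)` whenever the gradient is much larger than `eps`, regardless of the gradient's scale.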

Adamax

AdaMax is an extension of the Adaptive Moment Estimation (Adam) optimization algorithm and, more broadly, of gradient descent optimization. Adam can be understood as updating weights inversely proportional to the scaled L2 norm (squared) of past gradients; AdaMax extends this to the so-called infinity norm (max) of past gradients.

$$
\begin{aligned}
m_t &= \beta_1 m_{t-1} + (1-\beta_1)\,g_t \\
u_t &= \max(\beta_2 u_{t-1},\ |g_t|) \\
\eta_t &= \frac{\eta}{1-\beta_1^t} \\
w_t &= w_{t-1} - \eta_t\,\frac{m_t}{u_t + \epsilon}
\end{aligned}
$$

where:

- $m_t$ and $u_t$ are the first moment estimate and the exponentially weighted infinity norm of past gradients.
- $\beta_1$ and $\beta_2$ are the momentum coefficients.
- $g_t$ are the gradients of the parameters we want to update.
- $\eta$ is the learning rate.
- $\eta_t$ is the updated learning rate.
- $\epsilon$ is a smoothing term (avoids division by zero).
- $w_t$ are the weights.

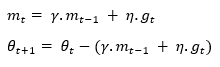
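The minimal Python sketch below shows one AdaMax step for a scalar weight. Here the max-based norm `u` replaces Adam's second moment, and the bias correction is folded into an updated learning rate. The name `adamax_step`, the `state` keys and the defaults are illustrative assumptions, not the toolkit's API.

```python
def adamax_step(w, g, state, lr=0.002, beta1=0.9, beta2=0.999, eps=1e-7):
    """One AdaMax update of scalar weight w given its gradient g."""
    state["t"] += 1
    t = state["t"]
    # First moment estimate, as in Adam.
    state["m"] = beta1 * state["m"] + (1 - beta1) * g
    # Exponentially weighted infinity norm: a decayed running max of |g|.
    state["u"] = max(beta2 * state["u"], abs(g))
    # Updated learning rate: bias correction of the first moment.
    lr_t = lr / (1 - beta1 ** t)
    return w - lr_t * state["m"] / (state["u"] + eps)

state = {"t": 0, "m": 0.0, "u": 0.0}
w = adamax_step(1.0, 2.0, state)
print(w)
```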

Inertia

$$
\begin{aligned}
v_t &= \mu\,v_{t-1} - \eta\,g_t \\
w_t &= w_{t-1} + v_t
\end{aligned}
$$

where:

- $v_t$ is the momentum.
- $\mu$ is the momentum coefficient.
- $g_t$ are the gradients of the parameters we want to update.
- $\eta$ is the learning rate.
- $w_t$ are the weights.

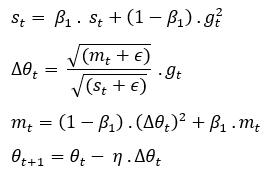
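The following minimal Python sketch assumes that "Inertia" denotes classical (heavy-ball) momentum, as the listed symbols suggest. The name `inertia_step`, the `state` dictionary and the defaults `lr=0.01` and `mu=0.9` are illustrative assumptions.

```python
def inertia_step(w, g, state, lr=0.01, mu=0.9):
    """One momentum ("inertia") update of scalar weight w given gradient g.

    Assumes classical heavy-ball momentum.
    """
    # The velocity keeps a decaying memory of previous steps.
    state["v"] = mu * state["v"] - lr * g
    return w + state["v"]

state = {"v": 0.0}
w = 1.0
for _ in range(2):
    w = inertia_step(w, 2 * w, state)  # gradient of f(w) = w**2
print(w)
```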

NAdam

Much as Adam is essentially RMSprop with momentum, NAdam is Adam with Nesterov momentum.

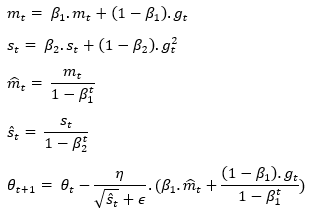

$$
\begin{aligned}
m_t &= \beta_1 m_{t-1} + (1-\beta_1)\,g_t \\
v_t &= \beta_2 v_{t-1} + (1-\beta_2)\,g_t^2 \\
\hat m_t &= \frac{m_t}{1-\beta_1^t}, \qquad \hat v_t = \frac{v_t}{1-\beta_2^t} \\
w_t &= w_{t-1} - \frac{\eta}{\sqrt{\hat v_t} + \epsilon}\left(\beta_1 \hat m_t + \frac{(1-\beta_1)\,g_t}{1-\beta_1^t}\right)
\end{aligned}
$$

where:

- $m_t$ and $v_t$ are the first and second moment estimates.
- $\hat m_t$ and $\hat v_t$ are the bias-corrected first and second moment estimates.
- $\beta_1$ and $\beta_2$ are the momentum coefficients.
- $g_t$ are the gradients of the parameters we want to update.
- $\eta$ is the learning rate.
- $\epsilon$ is a smoothing term (avoids division by zero).
- $w_t$ are the weights.

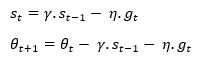
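The minimal Python sketch below shows one NAdam step for a scalar weight. It uses a common simplified formulation of Nesterov-accelerated Adam, in which the bias-corrected momentum is mixed with the current gradient as a look-ahead. The toolkit's implementation may differ; Dozat's original, for example, uses a momentum schedule. The name `nadam_step`, the `state` keys and the defaults are illustrative assumptions.

```python
import math

def nadam_step(w, g, state, lr=0.001, beta1=0.9, beta2=0.999, eps=1e-7):
    """One NAdam update (simplified formulation) of scalar weight w."""
    state["t"] += 1
    t = state["t"]
    # Same moment estimates and bias correction as Adam.
    state["m"] = beta1 * state["m"] + (1 - beta1) * g
    state["v"] = beta2 * state["v"] + (1 - beta2) * g * g
    m_hat = state["m"] / (1 - beta1 ** t)
    v_hat = state["v"] / (1 - beta2 ** t)
    # Nesterov look-ahead: blend the momentum with the current gradient.
    m_bar = beta1 * m_hat + (1 - beta1) * g / (1 - beta1 ** t)
    return w - lr * m_bar / (math.sqrt(v_hat) + eps)

state = {"t": 0, "m": 0.0, "v": 0.0}
w = nadam_step(1.0, 2.0, state)
print(w)
```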

Nesterov

Nesterov momentum is an extension of momentum that calculates the decaying moving average of the gradients at projected positions in the search space rather than at the actual positions themselves.

$$
\begin{aligned}
g_t &= \nabla L(w_{t-1} + \mu\,v_{t-1}) \\
v_t &= \mu\,v_{t-1} - \eta\,g_t \\
w_t &= w_{t-1} + v_t
\end{aligned}
$$

where:

- $v_t$ is the momentum.
- $\mu$ is the momentum coefficient.
- $g_t$ are the gradients of the parameters we want to update, evaluated at the projected position ($L$ is the loss).
- $\eta$ is the learning rate.
- $w_t$ are the weights.

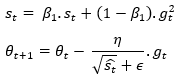
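The following minimal Python sketch shows Nesterov momentum for a scalar weight. The step takes a gradient function instead of a gradient value because the gradient must be evaluated at the projected position `w + mu * v`. The names `nesterov_step` and `grad_fn` and the defaults are hypothetical, chosen for illustration only.

```python
def nesterov_step(w, grad_fn, state, lr=0.01, mu=0.9):
    """One Nesterov momentum update of scalar weight w.

    grad_fn(x) is a hypothetical callable returning the loss gradient at x.
    """
    # Project ahead along the current velocity...
    lookahead = w + mu * state["v"]
    # ...and use the gradient at the projected position, not at w.
    state["v"] = mu * state["v"] - lr * grad_fn(lookahead)
    return w + state["v"]

state = {"v": 0.0}
w = 1.0
for _ in range(2):
    w = nesterov_step(w, lambda x: 2 * x, state)  # gradient of f(w) = w**2
print(w)
```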

RMSprop

The gist of RMSprop is to:

- Maintain a moving (discounted) average of the square of gradients

- Divide the gradient by the root of this average

This implementation of RMSprop uses plain momentum, not Nesterov momentum.

The centered version additionally maintains a moving average of the gradients, and uses that average to estimate the variance.

$$
\begin{aligned}
v_t &= \rho\,v_{t-1} + (1-\rho)\,g_t^2 \\
w_t &= w_{t-1} - \frac{\eta}{\sqrt{v_t + \epsilon}}\,g_t
\end{aligned}
$$

where:

- $v_t$ is the moving (discounted) average of the squared gradients.
- $\rho$ is the momentum coefficient (the discount factor of the moving average).
- $g_t$ are the gradients of the parameters we want to update.
- $\eta$ is the learning rate.
- $\epsilon$ is a smoothing term (avoids division by zero).
- $w_t$ are the weights.
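A minimal Python sketch of RMSprop for a scalar weight follows. It covers the optional plain (non-Nesterov) momentum and the centered variant described in this section. The name `rmsprop_step`, the `state` keys and the defaults are illustrative assumptions, not the toolkit's API or defaults.

```python
import math

def rmsprop_step(w, g, state, lr=0.001, rho=0.9, momentum=0.0,
                 centered=False, eps=1e-7):
    """One RMSprop update of scalar weight w given its gradient g."""
    # Moving (discounted) average of the squared gradients.
    state["v"] = rho * state["v"] + (1 - rho) * g * g
    denom = state["v"]
    if centered:
        # Also average the gradients and use the variance estimate instead.
        state["mg"] = rho * state["mg"] + (1 - rho) * g
        denom = max(denom - state["mg"] ** 2, 0.0)
    # Divide the gradient by the root of the average.
    step = lr * g / math.sqrt(denom + eps)
    if momentum > 0.0:
        # Plain momentum accumulated over the scaled steps.
        state["mom"] = momentum * state["mom"] + step
        step = state["mom"]
    return w - step

state = {"v": 0.0, "mg": 0.0, "mom": 0.0}
w = rmsprop_step(1.0, 2.0, state)
print(w)
```

Subtracting the squared mean gradient makes the centered variant's denominator smaller or equal, which produces a larger step for the same gradient.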

SGD

This parameter is used in the add_to_graph and define VIs of the AdditiveAttention, Attention, BatchNormalization, Conv1D, Conv1DTranspose, Conv2D, Conv2DTranspose, Conv3D, Conv3DTranspose, Dense, DepthwiseConv2D, Embedding, GRU, LayerNormalization, LSTM, MultiHeadAttention, SeparableConv1D, SeparableConv2D, SimpleRNN, PReLU, ConvLSTM1DCell, ConvLSTM2DCell, ConvLSTM3DCell, GRUCell, LSTMCell, SimpleRNNCell layers.