MultiHeadAttention

Description

Returns the MultiHeadAttention layer weights. Type : polymorphic.

Input parameters

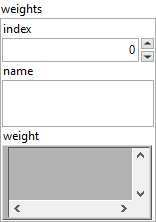

- weights : cluster
  - index : integer, index of layer.
  - name : string, name of layer.
  - weight : variant, weight of layer.

Output parameters

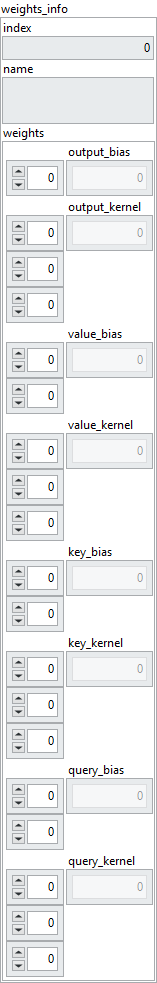

- weights_info : cluster
  - index : integer, index of layer.
  - name : string, name of layer.
  - weights : cluster
    - output_bias : array, 1D values. output_bias = query[2].
    - output_kernel : array, 3D values. output_kernel = [num_heads, value_dim, query[2]].
    - value_bias : array, 2D values. value_bias = [num_heads, value_dim].
    - value_kernel : array, 3D values. value_kernel = [value[2], num_heads, value_dim].
    - key_bias : array, 2D values. key_bias = [num_heads, key_dim].
    - key_kernel : array, 3D values. key_kernel = [key[2], num_heads, key_dim].
    - query_bias : array, 2D values. query_bias = [num_heads, key_dim].
    - query_kernel : array, 3D values. query_kernel = [query[2], num_heads, key_dim].

Dimension

- output_bias = query[2]

The size of output_bias depends on the size of the query input of the MultiHeadAttention layer: it is the entry at index 2 of the query size.

For example, if query has a size of [batch_size = 5, Tq = 3, dim = 2], then the size of output_bias is [dim = 2].

As another example, if query has a size of [batch_size = 10, Tq = 9, dim = 5], then the size of output_bias is [dim = 5].
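The rule can be written as a small shape function. This is a minimal Python sketch, assuming the query size is always 3D ([batch_size, Tq, dim]); `output_bias_shape` is a hypothetical helper, not part of the toolkit.

```python
def output_bias_shape(query_shape):
    """Size of output_bias for a query of size [batch_size, Tq, dim] (hypothetical helper)."""
    if len(query_shape) != 3:
        raise ValueError("query size is assumed to be [batch_size, Tq, dim]")
    # Only index 2 (dim) matters; batch_size and Tq do not affect the weights.
    return (query_shape[2],)


print(output_bias_shape((5, 3, 2)))   # (2,)
print(output_bias_shape((10, 9, 5)))  # (5,)
```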

- output_kernel = [num_heads, value_dim, query[2]]

The size of output_kernel depends on the query input size, the num_heads parameter, and the value_dim parameter of the MultiHeadAttention layer. From the query size, only the entry at index 2 is used.

For example, if query has a size of [batch_size = 5, Tq = 3, dim = 2], num_heads has a value of 8, and value_dim has a value of 5, then the size of output_kernel is [num_heads = 8, value_dim = 5, dim = 2].

As another example, if query has a size of [batch_size = 10, Tq = 9, dim = 4], num_heads has a value of 6, and value_dim has a value of 5, then the size of output_kernel is [num_heads = 6, value_dim = 5, dim = 4].
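The leading axes [num_heads, value_dim] of output_kernel match the per-head attention output, which the kernel maps back to query[2] features. The pure-Python sketch below shows this for a single query position. It assumes the layer combines the heads with a plain weighted sum over both num_heads and value_dim plus output_bias (as Keras-style MultiHeadAttention does); `full` and `output_projection` are hypothetical helpers.

```python
def full(shape, value):
    """Nested list of the given shape filled with value."""
    if len(shape) == 1:
        return [value] * shape[0]
    return [full(shape[1:], value) for _ in range(shape[0])]


def output_projection(heads, output_kernel, output_bias):
    """heads: [num_heads][value_dim] attention output for one query position.
    output_kernel: [num_heads][value_dim][dim]; output_bias: [dim].
    Sums over both num_heads and value_dim, returning [dim] values."""
    out = list(output_bias)
    for h, head in enumerate(heads):
        for v, x in enumerate(head):
            for d, w in enumerate(output_kernel[h][v]):
                out[d] += x * w
    return out


num_heads, value_dim, dim = 8, 5, 2  # first example above
heads = full((num_heads, value_dim), 1.0)
kernel = full((num_heads, value_dim, dim), 1.0)
bias = full((dim,), 0.0)
print(output_projection(heads, kernel, bias))  # [40.0, 40.0]
```

The result has dim = query[2] entries, which is why output_bias has the same size as the last axis of output_kernel.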

- value_bias = [num_heads, value_dim]

The size of value_bias depends on the num_heads parameter and the value_dim parameter of the MultiHeadAttention layer.

For example, if num_heads has a value of 8 and value_dim has a value of 5, then the size of value_bias is [num_heads = 8, value_dim = 5].

As another example, if num_heads has a value of 6 and value_dim has a value of 4, then the size of value_bias is [num_heads = 6, value_dim = 4].
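Because value_bias holds one value per head and per value channel, it contains num_heads * value_dim elements and does not depend on the value input size. A minimal sketch using only the Python standard library (`math.prod` requires Python 3.8+); `value_bias_shape` is a hypothetical helper.

```python
import math


def value_bias_shape(num_heads, value_dim):
    """Size of value_bias: one bias per head and per value channel (hypothetical helper)."""
    return (num_heads, value_dim)


for num_heads, value_dim in [(8, 5), (6, 4)]:  # the two examples above
    shape = value_bias_shape(num_heads, value_dim)
    print(shape, math.prod(shape))
# (8, 5) 40
# (6, 4) 24
```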

- value_kernel = [value[2], num_heads, value_dim]

The size of value_kernel depends on the value input size, the num_heads parameter, and the value_dim parameter of the MultiHeadAttention layer. From the size of the value input, only the entry at index 2 is used.

For example, if value has a size of [batch_size = 5, Tv = 3, dim = 2], num_heads has a value of 8, and value_dim has a value of 5, then the size of value_kernel is [dim = 2, num_heads = 8, value_dim = 5].

As another example, if value has a size of [batch_size = 10, Tv = 9, dim = 4], num_heads has a value of 6, and value_dim has a value of 5, then the size of value_kernel is [dim = 4, num_heads = 6, value_dim = 5].
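The layout [value[2], num_heads, value_dim] means that each value vector of dim features is projected into num_heads separate vectors of value_dim features. The pure-Python sketch below does this for one time step, assuming the projection is a weighted sum over dim followed by adding value_bias (as in Keras-style MultiHeadAttention); `project` is a hypothetical helper.

```python
def project(x, kernel, bias):
    """x: [dim] features of one time step.
    kernel: [dim][num_heads][head_dim]; bias: [num_heads][head_dim].
    Returns [num_heads][head_dim]: out[h][k] = sum_d x[d] * kernel[d][h][k] + bias[h][k]."""
    out = [list(row) for row in bias]  # start from a copy of the bias
    for d, x_d in enumerate(x):
        for h, head in enumerate(kernel[d]):
            for k, w in enumerate(head):
                out[h][k] += x_d * w
    return out


dim, num_heads, value_dim = 2, 8, 5  # first example above
value_kernel = [[[1.0] * value_dim for _ in range(num_heads)] for _ in range(dim)]
value_bias = [[0.0] * value_dim for _ in range(num_heads)]
projected = project([1.0, 2.0], value_kernel, value_bias)
print(len(projected), len(projected[0]), projected[0][0])  # 8 5 3.0
```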

- key_bias = [num_heads, key_dim]

The size of key_bias depends on the num_heads parameter and the key_dim parameter of the MultiHeadAttention layer.

For example, if num_heads has a value of 8 and key_dim has a value of 5, then the size of key_bias is [num_heads = 8, key_dim = 5].

As another example, if num_heads has a value of 6 and key_dim has a value of 4, then the size of key_bias is [num_heads = 6, key_dim = 4].
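Since the weight input is a variant, it can help to check an array's size against this rule before using it as key_bias. The sketch below is a hypothetical pure-Python check on nested lists, not a toolkit function; it assumes the arrays are rectangular.

```python
def nested_shape(array):
    """Size of a rectangular nested list, e.g. [[0.0, 0.0]] * 3 -> (3, 2).
    Only the first element of each level is inspected."""
    shape = []
    while isinstance(array, list):
        shape.append(len(array))
        array = array[0] if array else None
    return tuple(shape)


def check_key_bias(key_bias, num_heads, key_dim):
    """Raise ValueError unless key_bias has size [num_heads, key_dim]."""
    expected, actual = (num_heads, key_dim), nested_shape(key_bias)
    if actual != expected:
        raise ValueError(f"key_bias must have size {expected}, got {actual}")


check_key_bias([[0.0] * 5 for _ in range(8)], num_heads=8, key_dim=5)  # passes
try:
    check_key_bias([[0.0] * 4 for _ in range(6)], num_heads=8, key_dim=5)
except ValueError as err:
    print(err)  # key_bias must have size (8, 5), got (6, 4)
```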

- key_kernel = [key[2], num_heads, key_dim]

The size of key_kernel depends on the key input size, the num_heads parameter, and the key_dim parameter of the MultiHeadAttention layer. From the size of the key input, only the entry at index 2 is used.

For example, if key has a size of [batch_size = 5, Tv = 3, dim = 2], num_heads has a value of 8, and key_dim has a value of 5, then the size of key_kernel is [dim = 2, num_heads = 8, key_dim = 5].

As another example, if key has a size of [batch_size = 10, Tv = 9, dim = 4], num_heads has a value of 6, and key_dim has a value of 5, then the size of key_kernel is [dim = 4, num_heads = 6, key_dim = 5].
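key_kernel and query_kernel both end in [num_heads, key_dim], so query and key may have different feature sizes (query[2] and key[2]) and still yield per-head vectors of the same length that can be compared. The pure-Python sketch below assumes the layer scores them with standard scaled dot-product attention (dividing by sqrt(key_dim)), as Keras-style MultiHeadAttention does; biases are omitted, and `project` and `head_scores` are hypothetical helpers.

```python
import math


def project(x, kernel):
    """x: [in_dim]; kernel: [in_dim][num_heads][key_dim] -> [num_heads][key_dim] (bias omitted)."""
    num_heads, key_dim = len(kernel[0]), len(kernel[0][0])
    return [[sum(x[d] * kernel[d][h][k] for d in range(len(x)))
             for k in range(key_dim)]
            for h in range(num_heads)]


def head_scores(q_proj, k_proj):
    """One scaled dot-product score per head for one query/key position pair."""
    key_dim = len(q_proj[0])
    return [sum(q * k for q, k in zip(qh, kh)) / math.sqrt(key_dim)
            for qh, kh in zip(q_proj, k_proj)]


num_heads, key_dim = 2, 4
query_kernel = [[[1.0] * key_dim for _ in range(num_heads)] for _ in range(2)]  # query[2] = 2
key_kernel = [[[0.5] * key_dim for _ in range(num_heads)] for _ in range(3)]    # key[2] = 3
q = project([1.0, 1.0], query_kernel)     # every entry is 2.0
k = project([1.0, 1.0, 1.0], key_kernel)  # every entry is 1.5
print(head_scores(q, k))  # [6.0, 6.0]
```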

- query_bias = [num_heads, key_dim]

The size of query_bias depends on the num_heads parameter and the key_dim parameter of the MultiHeadAttention layer.

For example, if num_heads has a value of 8 and key_dim has a value of 5, then the size of query_bias is [num_heads = 8, key_dim = 5].

As another example, if num_heads has a value of 6 and key_dim has a value of 4, then the size of query_bias is [num_heads = 6, key_dim = 4].
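query_bias and key_bias have the same size, and neither depends on the query or key input size. The hypothetical helper below derives the three projection bias sizes from the layer parameters alone.

```python
def projection_bias_shapes(num_heads, key_dim, value_dim):
    """Sizes of the projection biases; no input size is needed (hypothetical helper)."""
    return {
        "query_bias": (num_heads, key_dim),
        "key_bias": (num_heads, key_dim),
        "value_bias": (num_heads, value_dim),
    }


shapes = projection_bias_shapes(num_heads=6, key_dim=4, value_dim=5)
print(shapes["query_bias"] == shapes["key_bias"])  # True
print(shapes)  # {'query_bias': (6, 4), 'key_bias': (6, 4), 'value_bias': (6, 5)}
```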

- query_kernel = [query[2], num_heads, key_dim]

The size of query_kernel depends on the query input size, the num_heads parameter, and the key_dim parameter of the MultiHeadAttention layer. From the query size, only the entry at index 2 is used.

For example, if query has a size of [batch_size = 5, Tq = 3, dim = 2], num_heads has a value of 8, and key_dim has a value of 5, then the size of query_kernel is [dim = 2, num_heads = 8, key_dim = 5].

As another example, if query has a size of [batch_size = 10, Tq = 9, dim = 4], num_heads has a value of 6, and key_dim has a value of 5, then the size of query_kernel is [dim = 4, num_heads = 6, key_dim = 5].
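Putting the rules of this section together, the sketch below derives every entry of the weights cluster from the input sizes and the layer parameters, then counts the total number of weights. `mha_weight_shapes` is a hypothetical Python helper; its dictionary keys follow the order of the weights cluster in the output parameters.

```python
import math


def mha_weight_shapes(query_shape, key_shape, value_shape, num_heads, key_dim, value_dim):
    """Sizes of the MultiHeadAttention weights; inputs are [batch_size, T, dim]."""
    q_dim, k_dim, v_dim = query_shape[2], key_shape[2], value_shape[2]
    return {
        "output_bias": (q_dim,),
        "output_kernel": (num_heads, value_dim, q_dim),
        "value_bias": (num_heads, value_dim),
        "value_kernel": (v_dim, num_heads, value_dim),
        "key_bias": (num_heads, key_dim),
        "key_kernel": (k_dim, num_heads, key_dim),
        "query_bias": (num_heads, key_dim),
        "query_kernel": (q_dim, num_heads, key_dim),
    }


shapes = mha_weight_shapes((5, 3, 2), (5, 3, 2), (5, 3, 2), num_heads=8, key_dim=5, value_dim=5)
print(shapes["query_kernel"])                      # (2, 8, 5)
print(sum(math.prod(s) for s in shapes.values()))  # 442
```

The total of 442 breaks down as three input projections of 2 * 40 + 40 = 120 weights each, plus 40 * 2 + 2 = 82 weights for the output projection.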