Welcome to our Support Center

-

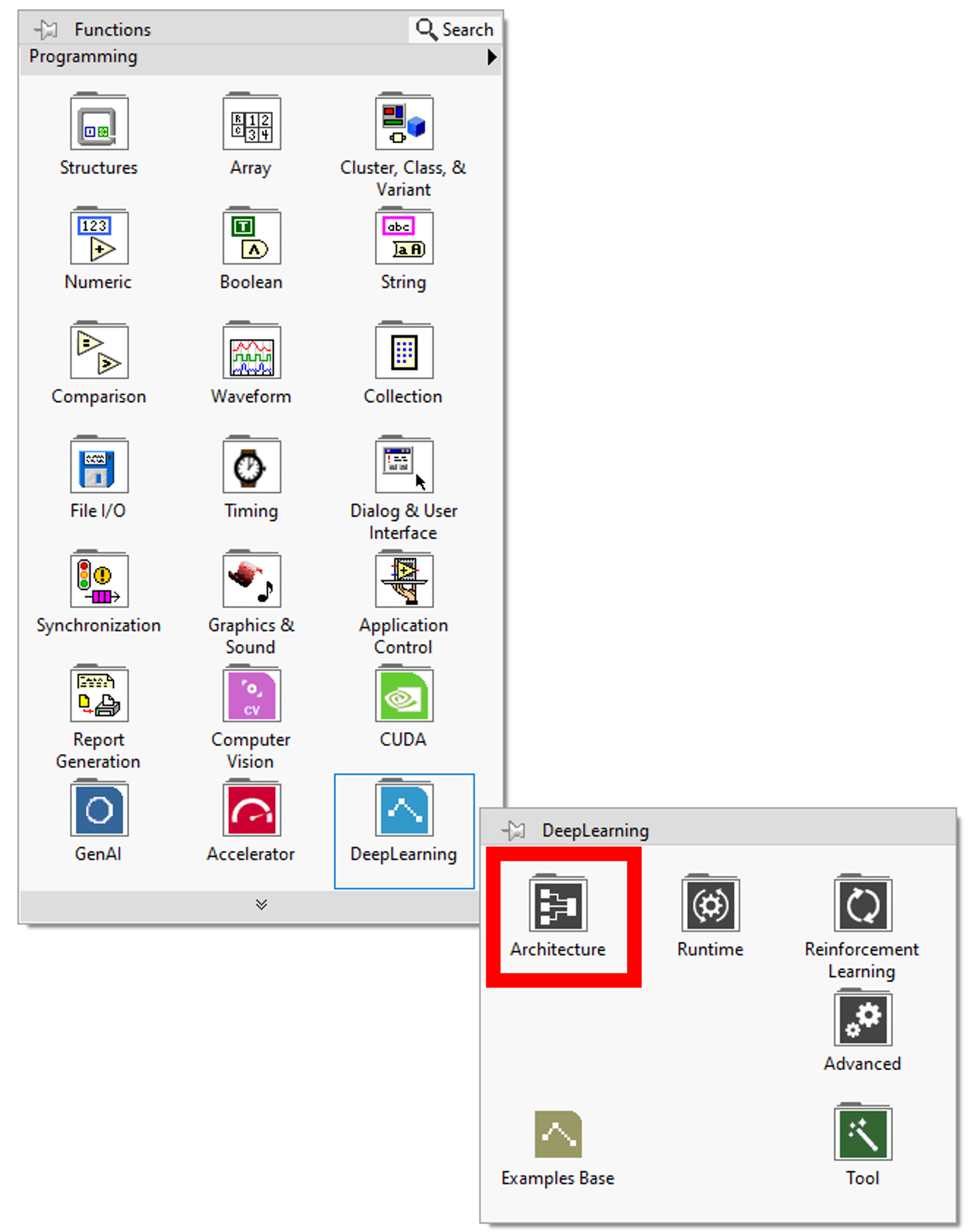

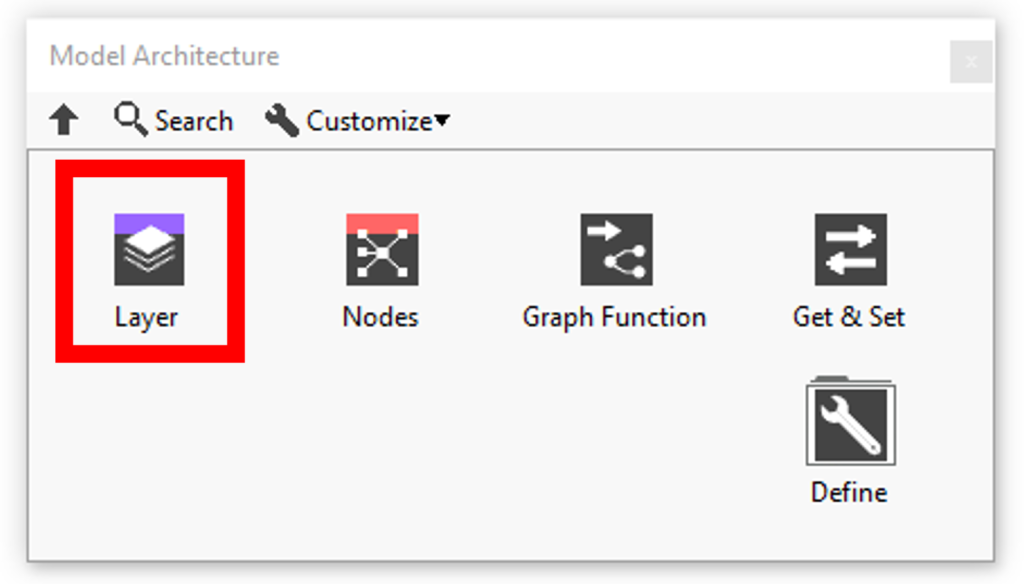

Quick start

-

API

-

-

- Resume

- Add

- AdditiveAttention

- AlphaDropout

- Attention

- Average

- AvgPool1D

- AvgPool2D

- AvgPool3D

- BatchNormalization

- Bidirectional

- Concatenate

- Conv1D

- Conv1DTranspose

- Conv2D

- Conv2DTranspose

- Conv3D

- Conv3DTranspose

- ConvLSTM1D

- ConvLSTM2D

- ConvLSTM3D

- Cropping1D

- Cropping2D

- Cropping3D

- Dense

- DepthwiseConv2D

- Dropout

- ELU

- Embedding

- Exponential

- Flatten

- GaussianDropout

- GaussianNoise

- GELU

- GlobalAvgPool1D

- GlobalAvgPool2D

- GlobalAvgPool3D

- GlobalMaxPool1D

- GlobalMaxPool2D

- GlobalMaxPool3D

- GRU

- HardSigmoid

- Input

- LayerNormalization

- LeakyReLU

- Linear

- LSTM

- MaxPool1D

- MaxPool2D

- MaxPool3D

- MultiHeadAttention

- Multiply

- Output Predict

- Output Train

- Permute3D

- PReLU

- ReLU

- Reshape

- RNN

- SELU

- SeparableConv1D

- SeparableConv2D

- Sigmoid

- SimpleRNN

- SoftMax

- SoftPlus

- SoftSign

- SpatialDropout

- Split

- Substract

- Swish

- TanH

- ThresholdedReLU

- UpSampling1D

- UpSampling2D

- UpSampling3D

- ZeroPadding1D

- ZeroPadding2D

- ZeroPadding3D

- Show All Articles (64) Collapse Articles

-

-

-

-

- Abs

- Acos

- Acosh

- ArgMax

- ArgMin

- Asin

- Asinh

- Atan

- Atanh

- AveragePool

- Bernouilli

- BitwiseNot

- BlackmanWindow

- Cast

- Ceil

- Celu

- ConcatFromSequence

- Cos

- Cosh

- DepthToSpace

- Det

- DynamicTimeWarping

- Erf

- Exp

- EyeLike

- Flatten

- Floor

- GlobalAveragePool

- GlobalLpPool

- GlobalMaxPool

- HammingWindow

- HannWindow

- HardSwish

- HardMax

- Identity

- ImageDecoder

- Inverse

- lrfft

- lslnf

- lsNaN

- Log

- LogSoftmax

- LpNormalization

- LpPool

- LRN

- MeanVarianceNormalization

- MicrosoftGelu

- Mish

- Multinomial

- MurmurHash3

- Neg

- NhwcMaxPool

- NonZero

- Not

- OptionalGetElement

- OptionalHasElement

- QuickGelu

- RandomNormalLike

- RandomUniformLike

- RawConstantOfShape

- Reciprocal

- ReduceSumInteger

- RegexFullMatch

- Rfft

- Round

- SampleOp

- SequenceLength

- Shape

- Shrink

- Sign

- Sin

- Sinh

- Size

- SpaceToDepth

- Sqrt

- StringNormalizer

- Tan

- TfldfVectorizer

- Tokenizer

- Transpose

- UnfoldTensor

- Show All Articles (66) Collapse Articles

-

-

-

- Add

- AffineGrid

- And

- BiasAdd

- BiasGelu

- BiasSoftmax

- BiasSplitGelu

- BitShift

- BitwiseAnd

- BitwiseOr

- BitwiseXor

- CastLike

- CDist

- CenterCropPad

- Clip

- Col2lm

- ComplexMul

- ComplexMulConj

- Compress

- Conv

- ConvInteger

- ConvTranspose

- ConvTransposeWithDynamicPads

- CropAndResize

- CumSum

- DeformConv

- DequantizeBFP

- DequantizeLinear

- DequantizeWithOrder

- DFT

- Div

- DynamicQuantizeMatMul

- Equal

- Expand

- ExpandDims

- FastGelu

- FusedConv

- FusedGemm

- FusedMatMul

- FusedMatMulActivation

- GatedRelativePositionBias

- Gather

- GatherElements

- GatherND

- Gemm

- GemmFastGelu

- GemmFloat8

- Greater

- GreaterOrEqual

- GreedySearch

- GridSample

- GroupNorm

- InstanceNormalization

- Less

- LessOrEqual

- LongformerAttention

- MatMul

- MatMulBnb4

- MatMulFpQ4

- MatMulInteger

- MatMulInteger16

- MatMulIntergerToFloat

- MatMulNBits

- MaxPoolWithMask

- MaxRoiPool

- MaxUnPool

- MelWeightMatrix

- MicrosoftDequantizeLinear

- MicrosoftGatherND

- MicrosoftGridSample

- MicrosoftPad

- MicrosoftQLinearConv

- MicrosoftQuantizeLinear

- MicrosoftRange

- MicrosoftTrilu

- Mod

- MoE

- Mul

- MulInteger

- NegativeLogLikelihoodLoss

- NGramRepeatBlock

- NhwcConv

- NhwcFusedConv

- NonMaxSuppression

- OneHot

- Or

- PackedAttention

- PackedMultiHeadAttention

- Pad

- Pow

- QGemm

- QLinearAdd

- QLinearAveragePool

- QLinearConcat

- QLinearConv

- QLinearGlobalAveragePool

- QLinearLeakyRelu

- QLinearMatMul

- QLinearMul

- QLinearReduceMean

- QLinearSigmoid

- QLinearSoftmax

- QLinearWhere

- QMoE

- QOrderedAttention

- QOrderedGelu

- QOrderedLayerNormalization

- QOrderedLongformerAttention

- QOrderedMatMul

- QuantizeLinear

- QuantizeWithOrder

- Range

- ReduceL1

- ReduceL2

- ReduceLogSum

- ReduceLogSumExp

- ReduceMax

- ReduceMean

- ReduceMin

- ReduceProd

- ReduceSum

- ReduceSumSquare

- RelativePositionBias

- Reshape

- Resize

- RestorePadding

- ReverseSequence

- RoiAlign

- RotaryEmbedding

- ScatterElements

- ScatterND

- SequenceAt

- SequenceErase

- SequenceInsert

- Slice

- SparseToDenseMatMul

- SplitToSequence

- Squeeze

- STFT

- StringConcat

- Sub

- Tile

- TorchEmbedding

- TransposeMatMul

- Trilu

- Unsqueeze

- Where

- WordConvEmbedding

- Xor

- Show All Articles (134) Collapse Articles

-

- Attention

- AttnLSTM

- BatchNormalization

- BiasDropout

- BifurcationDetector

- BitmaskBiasDropout

- BitmaskDropout

- DecoderAttention

- DecoderMaskedMultiHeadAttention

- DecoderMaskedSelfAttention

- Dropout

- DynamicQuantizeLSTM

- EmbedLayerNormalization

- GemmaRotaryEmbedding

- GroupQueryAttention

- GRU

- LayerNormalization

- LSTM

- MicrosoftMultiHeadAttention

- QAttention

- RemovePadding

- RNN

- Sampling

- SkipGroupNorm

- SkipLayerNormalization

- SkipSimplifiedLayerNormalization

- SoftmaxCrossEntropyLoss

- SparseAttention

- TopK

- WhisperBeamSearch

- Show All Articles (15) Collapse Articles

-

-

-

-

-

-

- Resume

- Constant

- GlorotNormal

- GlorotUniform

- HeNormal

- HeUniform

- Identity

- LecunNormal

- LecunUniform

- Ones

- Orthogonal

- RandomNormal

- RandomUnifom

- TruncatedNormal

- VarianceScaling

- Zeros

- Show All Articles (1) Collapse Articles

-

- Resume

- BinaryCrossentropy

- CategoricalCrossentropy

- CategoricalHinge

- CosineSimilarity

- Hinge

- Huber

- KLDivergence

- LogCosh

- MeanAbsoluteError

- MeanAbsolutePercentageError

- MeanSquaredError

- MeanSquaredLogarithmicError

- Poisson

- SquaredHinge

- Custom

- Show All Articles (1) Collapse Articles

-

-

-

-

-

- Dense

- PReLU 2D

- PReLU 3D

- PReLU 4D

- PReLU 5D

- AdditiveAttention

- Attention

- MutiHeadAttention

- Conv1D

- Conv2D

- Conv3D

- ConvLSTM1D

- ConvLSTM2D

- ConvLSTM3D

- Conv1DTranspose

- Conv2DTranspose

- Conv3DTranspose

- DepthwiseConv2D

- SeparableConv1D

- SeparableConv2D

- Embedding

- BatchNormalization

- LayerNormalization

- Bidirectional

- GRU

- LSTM

- SimpleRNN

- Show All Articles (12) Collapse Articles

-

- Dense

- PReLU 2D

- PReLU 3D

- PReLU 4D

- PReLU 5D

- AdditiveAttention

- Attention

- MultiHeadAttention

- Conv1D

- Conv2D

- Conv3D

- ConvLSTM1D

- ConvLSTM2D

- ConvLSTM3D

- Conv1DTranspose

- Conv2DTranspose

- Conv3DTranspose

- DepthwiseConv2D

- SeparableConv1D

- SeparableConv2D

- Embedding

- BatchNormalization

- LayerNormalization

- Bidirectional

- GRU

- LSTM

- SimpleRNN

- Show All Articles (12) Collapse Articles

-

-

- Resume

- Dense

- AdditiveAttention

- Attention

- MultiHeadAttention

- BatchNormalization

- LayerNormalization

- Bidirectional

- GRU

- LSTM

- SimpleRNN

- Conv1D

- Conv2D

- Conv3D

- Conv1DTranspose

- Conv2DTranspose

- Conv3DTranspose

- ConvLSTM1D

- ConvLSTM2D

- ConvLSTM3D

- DepthwiseConv2D

- SeparableConv1D

- SeparableConv2D

- Embedding

- PReLU 2D

- PReLU 3D

- PReLU 4D

- PReLU 5D

- Show All Articles (13) Collapse Articles

-

-

- Dense

- Embedding

- AdditiveAttention

- Attention

- MultiHeadAttention

- Conv1D

- Conv2D

- Conv3D

- ConvLSTM1D

- ConvLSTM2D

- ConvLSTM3D

- Conv1DTranspose

- Conv2DTranspose

- Conv3DTranspose

- DepthwiseConv2D

- SeparableConv1D

- SeparableConv2D

- BatchNormalization

- LayerNormalization

- PReLU 2D

- PReLU 3D

- PReLU 4D

- PReLU 5D

- Bidirectional

- GRU

- LSTM

- RNN (GRU)

- RNN (LSTM)

- RNN (SimpleRNN)

- SimpleRNN

- Show All Articles (15) Collapse Articles

-

- Dense

- Embedding

- AdditiveAttention

- Attention

- MultiHeadAttention

- Conv1D

- Conv2D

- Conv3D

- ConvLSTM1D

- ConvLSTM2D

- ConvLSTM3D

- Conv1DTranspose

- Conv2DTranspose

- Conv3DTranspose

- DepthwiseConv2D

- SeparableConv1D

- SeparableConv2D

- BatchNormalization

- LayerNormalization

- PReLU 2D

- PReLU 3D

- PReLU 4D

- PReLU 5D

- Bidirectional

- GRU

- LSTM

- RNN (GRU)

- RNN (LSTM)

- RNN (SimpleRNN)

- SimpleRNN

- Show All Articles (15) Collapse Articles

-

-

-

- Dense

- Embedding

- AdditiveAttention

- Attention

- MultiHeadAttention

- Conv1D

- Conv2D

- Conv3D

- ConvLSTM1D

- ConvLSTM2D

- ConvLSTM3D

- Conv1DTranspose

- Conv2DTranspose

- Conv3DTranspose

- DepthwiseConv2D

- SeparableConv1D

- SeparableConv2D

- BatchNormalization

- LayerNormalization

- PReLU 2D

- PReLU 3D

- PReLU 4D

- PReLU 5D

- Bidirectional

- GRU

- LSTM

- RNN (GRU)

- RNN (LSTM)

- RNN (SimpleRNN)

- SimpleRNN

- Show All Articles (15) Collapse Articles

-

- Dense

- Embedding

- AdditiveAttention

- Attention

- MultiHeadAttention

- Conv1D

- Conv2D

- Conv3D

- ConvLSTM1D

- ConvLSTM2D

- ConvLSTM3D

- Conv1DTranspose

- Conv2DTranspose

- Conv3DTranspose

- DepthwiseConv2D

- SeparableConv1D

- SeparableConv2D

- BatchNormalization

- LayerNormalization

- PReLU 2D

- PReLU 3D

- PReLU 4D

- PReLU 5D

- Bidirectional

- GRU

- LSTM

- RNN (GRU)

- RNN (LSTM)

- RNN (SimpleRNN)

- SimpleRNN

- Show All Articles (15) Collapse Articles

-

-

- Resume

- Accuracy

- BinaryAccuracy

- BinaryCrossentropy

- BinaryIoU

- CategoricalAccuracy

- CategoricalCrossentropy

- CategoricalHinge

- CosineSimilarity

- FalseNegatives

- FalsePositives

- Hinge

- Huber

- IoU

- KLDivergence

- LogCoshError

- Mean

- MeanAbsoluteError

- MeanAbsolutePercentageError

- MeanIoU

- MeanRelativeError

- MeanSquaredError

- MeanSquaredLogarithmicError

- MeanTensor

- OneHotIoU

- OneHotMeanIoU

- Poisson

- Precision

- PrecisionAtRecall

- Recall

- RecallAtPrecision

- RootMeanSquaredError

- SensitivityAtSpecificity

- SparseCategoricalAccuracy

- SparseCategoricalCrossentropy

- SparseTopKCategoricalAccuracy

- Specificity

- SpecificityAtSensitivity

- SquaredHinge

- Sum

- TopKCategoricalAccuracy

- TrueNegatives

- TruePositives

- Show All Articles (28) Collapse Articles

-

-

Updated

Layers summary

LAYERS

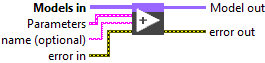

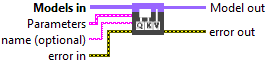

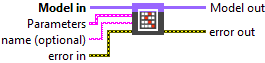

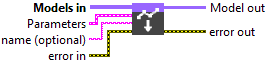

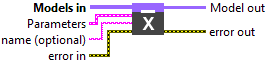

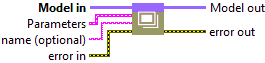

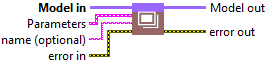

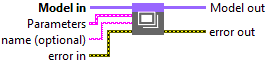

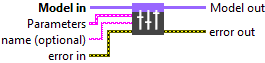

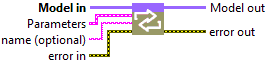

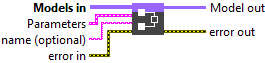

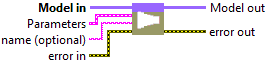

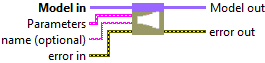

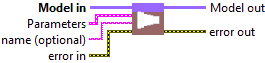

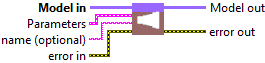

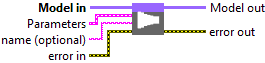

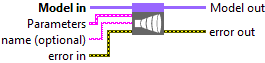

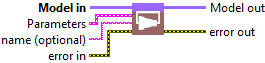

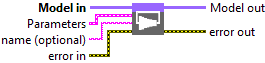

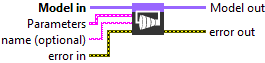

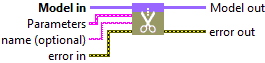

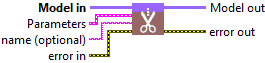

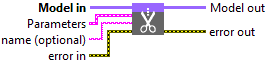

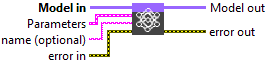

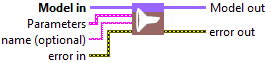

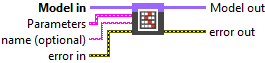

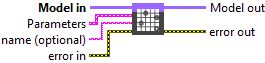

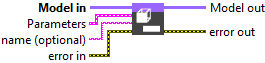

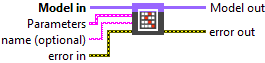

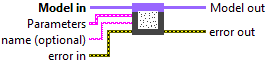

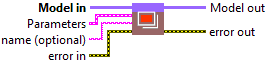

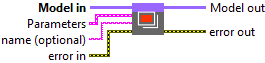

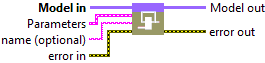

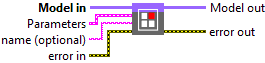

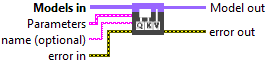

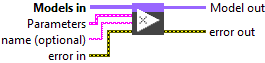

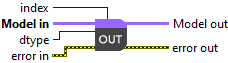

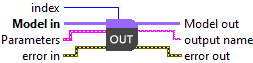

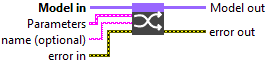

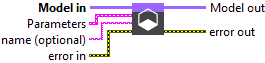

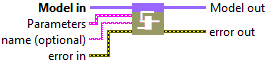

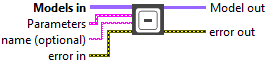

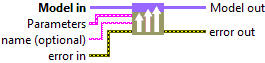

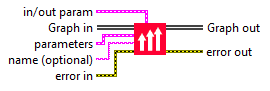

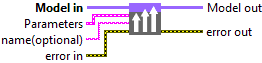

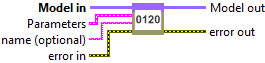

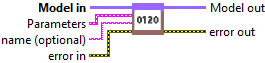

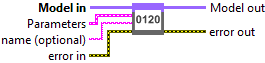

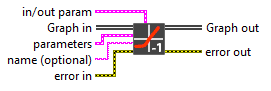

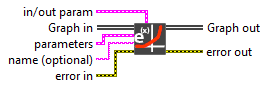

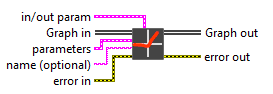

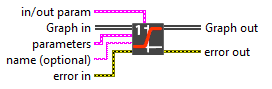

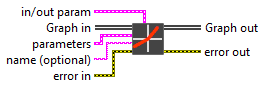

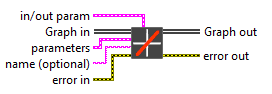

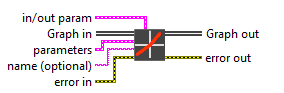

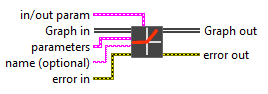

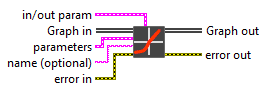

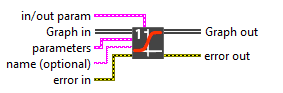

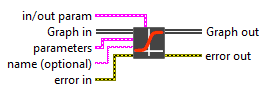

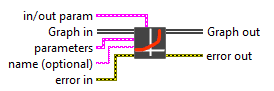

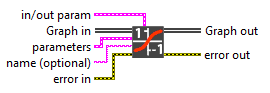

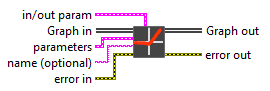

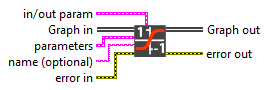

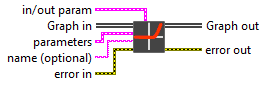

Each “add to graph” VI defines a layer and links it to the other layers of the model.

This section lists all available “add to graph” layers.
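The following minimal Python sketch shows what the graph definition step does conceptually. Every call defines one layer and records links to the layers it takes as input. The `GraphDefinition` class, its `add_to_graph` method and the `LayerNode` record are hypothetical illustrations, not the toolkit's API. In the toolkit itself, the VIs listed in the table do this work.

```python
from dataclasses import dataclass, field


@dataclass(eq=False)  # compare nodes by identity, not by field values
class LayerNode:
    layer_type: str                              # e.g. "Dense", "Add" (hypothetical representation)
    params: dict = field(default_factory=dict)   # layer settings such as units or activation
    inputs: list = field(default_factory=list)   # upstream LayerNode objects
    index: int = -1                              # position in the graph, set on insertion


class GraphDefinition:
    """Hypothetical stand-in for the graph definition step."""

    def __init__(self):
        self.nodes = []

    def add_to_graph(self, layer_type, *inputs, **params):
        """Define one layer and link it to the layers it consumes."""
        for upstream in inputs:
            # Only layers already defined in this graph can be linked as inputs,
            # which also guarantees the graph cannot contain cycles.
            in_graph = 0 <= upstream.index < len(self.nodes) and self.nodes[upstream.index] is upstream
            if not in_graph:
                raise ValueError("input layer is not part of this graph")
        node = LayerNode(layer_type, dict(params), list(inputs), len(self.nodes))
        self.nodes.append(node)
        return node

    def edges(self):
        """Return (source_index, target_index) pairs describing the links."""
        return [(src.index, node.index) for node in self.nodes for src in node.inputs]


graph = GraphDefinition()
x = graph.add_to_graph("Input", shape=(16,))
a = graph.add_to_graph("Dense", x, units=8, activation="relu")
b = graph.add_to_graph("Dense", x, units=8)
merged = graph.add_to_graph("Add", a, b)
out = graph.add_to_graph("Output Predict", merged)
print(graph.edges())  # [(0, 1), (0, 2), (1, 3), (2, 3), (3, 4)]
```

An input must already belong to the graph before it can be linked. As a result, insertion order is always a valid execution order, so the sketch needs no separate cycle check.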

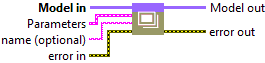

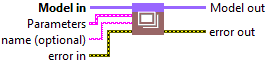

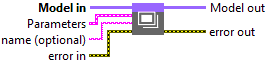

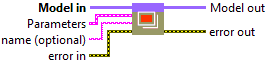

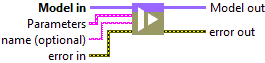

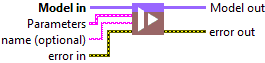

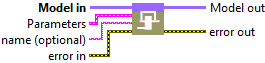

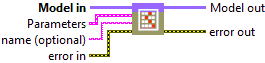

| ICONS | RESUME | |

| Add |  |

Setup and add the add layer into the model during the definition graph step. |

| AdditiveAttention |  |

Setup and add the additive attention layer into the model during the definition graph step. |

| AlphaDropout |  |

Setup and add the alpha dropout layer into the model during the definition graph step. |

| Attention |  |

Setup and add the attention layer into the model during the definition graph step. |

| Average |  |

Setup and add the average layer into the model during the definition graph step. |

| AvgPool1D |  |

Setup and add the average pooling 1D layer into the model during the definition graph step. |

| AvgPool2D |  |

Setup and add the average pooling 2D layer into the model during the definition graph step. |

| AvgPool3D |  |

Setup and add the average pooling 3D layer into the model during the definition graph step. |

| BatchNormalization |  |

Setup and add the batch normalization layer into the model during the definition graph step. |

| Bidirectional |  |

Setup and add the bidirectional layer into the model during the definition graph step. |

| Concatenate |  |

Setup and add the concatenate layer into the model during the definition graph step. |

| Conv1D |  |

Setup and add the convolution 1D layer into the model during the definition graph step. |

| Conv1DTranspose |  |

Setup and add the convolution 1D transpose layer into the model during the definition graph step. |

| Conv2D |  |

Setup and add the convolution 2D layer into the model during the definition graph step. |

| Conv2DTranspose |  |

Setup and add the convolution 2D transpose layer into the model during the definition graph step. |

| Conv3D |  |

Setup and add the convolution 3D layer into the model during the definition graph step. |

| Conv3DTranspose |  |

Setup and add the convolution 3D transpose layer into the model during the definition graph step. |

| ConvLSTM1D |  |

Setup and add the convolution lstm 1D layer into the model during the definition graph step. |

| ConvLSTM2D |  |

Setup and add the convolution lstm 2D layer into the model during the definition graph step. |

| ConvLSTM3D |  |

Setup and add the convolution lstm 3D layer into the model during the definition graph step. |

| Cropping1D |  |

Setup and add the cropping 1D layer into the model during the definition graph step. |

| Cropping2D |  |

Setup and add the cropping 2D layer into the model during the definition graph step. |

| Cropping3D |  |

Setup and add the cropping 3D layer into the model during the definition graph step. |

| Dense |  |

Setup and add the dense layer into the model during the definition graph step. |

| DepthwiseConv2D |  |

Setup and add the depthwise convolution 2D layer into the model during the definition graph step. |

| Dropout |  |

Setup and add the dropout layer into the model during the definition graph step. |

| Embedding |  |

Setup and add the embedding layer into the model during the definition graph step. |

| Flatten |  |

Setup and add the flatten layer into the model during the definition graph step. |

| GaussianDropout |  |

Setup and add the gaussian dropout layer into the model during the definition graph step. |

| GaussianNoise |  |

Setup and add the gaussian noise layer into the model during the definition graph step. |

| GlobalAvgPool1D |  |

Setup and add the global average pooling 1D layer into the model during the definition graph step. |

| GlobalAvgPool2D |  |

Setup and add the global average pooling 2D layer into the model during the definition graph step. |

| GlobalAvgPool3D |  |

Setup and add the global average pooling 3D layer into the model during the definition graph step. |

| GlobalMaxPool1D |  |

Setup and add the global max pooling 1D layer into the model during the definition graph step. |

| GlobalMaxPool2D |  |

Setup and add the global max pooling 2D layer into the model during the definition graph step. |

| GlobalMaxPool3D |  |

Setup and add the global max pooling 3D layer into the model during the definition graph step. |

| GRU |  |

Setup and add the gru layer into the model during the definition graph step. |

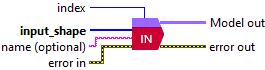

| Input |  |

Setup and add the input layer into the model during the definition graph step. |

| LayerNormalization |  |

Setup and add the layer normalization layer into the model during the definition graph step. |

| LSTM |  |

Setup and add the lstm layer into the model during the definition graph step. |

| MaxPool1D |  |

Setup and add the max pooling 1D layer into the model during the definition graph step. |

| MaxPool2D |  |

Setup and add the max pooling 2D layer into the model during the definition graph step. |

| MaxPool3D |  |

Setup and add the max pooling 3D layer into the model during the definition graph step. |

| MultiHeadAttention |  |

Setup and add the multi head attention layer into the model during the definition graph step. |

| Multiply |  |

Setup and add the multiply layer into the model during the definition graph step. |

| Output Predict |  |

Setup and add the output layer into the model during the definition graph step. |

| Output Train |  |

Setup and add the output layer into the model during the definition graph step. |

| Permute3D |  |

Setup and add the permute 3D layer into the model during the definition graph step. |

| Reshape |  |

Setup and add the reshape layer into the model during the definition graph step. |

| RNN |  |

Setup and add the rnn layer into the model during the definition graph step. |

| SeparableConv1D |  |

Setup and add the separable conv 1D layer into the model during the definition graph step. |

| SeparableConv2D |  |

Setup and add the separable conv 2D layer into the model during the definition graph step. |

| SimpleRNN |  |

Setup and add the simple rnn layer into the model during the definition graph step. |

| SpatialDropout |  |

Setup and add the spatial dropout layer into the model during the definition graph step. |

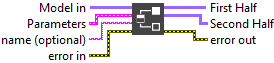

| Split |  |

Setup and add the split layer into the model during the definition graph step. |

| Substract |  |

Setup and add the substract layer into the model during the definition graph step. |

| UpSampling1D |  |

Setup and add the up sampling 1D layer into the model during the definition graph step. |

| UpSampling2D |  |

Setup and add the up sampling 2D layer into the model during the definition graph step. |

| UpSampling3D |  |

Setup and add the up sampling 3D layer into the model during the definition graph step. |

| ZeroPadding1D |  |

Setup and add the zero padding 1D layer into the model during the definition graph step. |

| ZeroPadding2D |  |

Setup and add the zero padding 2D layer into the model during the definition graph step. |

| ZeroPadding3D |  |

Setup and add the zero padding 3D layer into the model during the definition graph step. |
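Several layers in the table change the length of each spatial axis: convolution, pooling, cropping, zero padding and upsampling. The helpers below sketch that arithmetic for a single axis. They assume the Keras/TensorFlow conventions for `valid` and `same` padding. The function names are hypothetical, and the toolkit's own parameter names and defaults may differ. Keras uses the pool size as the default stride for pooling layers, so the stride is passed explicitly here.

```python
import math


def conv_or_pool_length(n, kernel, stride=1, padding="valid", dilation=1):
    """Output length along one axis for Conv1D / MaxPool1D / AvgPool1D-style layers
    (assumes Keras/TensorFlow padding conventions)."""
    if padding == "valid":
        # The dilated kernel spans dilation * (kernel - 1) + 1 input positions.
        effective = dilation * (kernel - 1) + 1
        if n < effective:
            raise ValueError("input is shorter than the (dilated) kernel")
        return (n - effective) // stride + 1
    if padding == "same":
        # Input is padded so that only the stride reduces the length.
        return math.ceil(n / stride)
    raise ValueError(f"unknown padding: {padding!r}")


def zero_padding_length(n, pad_before, pad_after):
    """ZeroPadding1D-style: zeros are added at both ends of the axis."""
    return n + pad_before + pad_after


def cropping_length(n, crop_before, crop_after):
    """Cropping1D-style: elements are removed from both ends of the axis."""
    out = n - crop_before - crop_after
    if out <= 0:
        raise ValueError("cropping removes the whole axis")
    return out


def upsampling_length(n, size=2):
    """UpSampling1D-style: each element is repeated `size` times."""
    return n * size


print(conv_or_pool_length(10, 3))                            # 8
print(conv_or_pool_length(10, 2, stride=2))                  # 5 (pool size 2, stride 2)
print(conv_or_pool_length(11, 3, stride=2, padding="same"))  # 6
```

For 2D and 3D layers, apply the same per-axis formula independently to each spatial axis.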

ACTIVATIONS

This section lists all available “add to graph” activations.

| ACTIVATION | SUMMARY |
|---|---|
| ELU | Sets up the ELU activation layer and adds it to the model during the graph definition step. |
| Exponential | Sets up the exponential activation layer and adds it to the model during the graph definition step. |
| GELU | Sets up the GELU activation layer and adds it to the model during the graph definition step. |
| HardSigmoid | Sets up the hard sigmoid activation layer and adds it to the model during the graph definition step. |
| LeakyReLU | Sets up the leaky ReLU activation layer and adds it to the model during the graph definition step. |
| Linear | Sets up the linear activation layer and adds it to the model during the graph definition step. |
| PReLU | Sets up the PReLU activation layer and adds it to the model during the graph definition step. |
| ReLU | Sets up the ReLU activation layer and adds it to the model during the graph definition step. |
| SELU | Sets up the SELU activation layer and adds it to the model during the graph definition step. |
| Sigmoid | Sets up the sigmoid activation layer and adds it to the model during the graph definition step. |
| SoftMax | Sets up the softmax activation layer and adds it to the model during the graph definition step. |
| SoftPlus | Sets up the softplus activation layer and adds it to the model during the graph definition step. |
| SoftSign | Sets up the softsign activation layer and adds it to the model during the graph definition step. |
| Swish | Sets up the swish activation layer and adds it to the model during the graph definition step. |
| TanH | Sets up the tanh activation layer and adds it to the model during the graph definition step. |
| ThresholdedReLU | Sets up the thresholded ReLU activation layer and adds it to the model during the graph definition step. |
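For reference, each activation in the table corresponds to a well-known function. The sketch below writes the scalar forms in plain Python using their standard definitions. Some parameters are assumptions borrowed from common Keras defaults: `alpha` for ELU and LeakyReLU, `theta` for ThresholdedReLU, and the HardSigmoid slope. The toolkit's own defaults may differ. Softmax is shown on a list because it normalizes across a whole vector.

```python
import math


def elu(x, alpha=1.0):
    # expm1(x) = e^x - 1, computed accurately for small |x|
    return x if x > 0 else alpha * math.expm1(x)


def exponential(x):
    return math.exp(x)


def gelu(x):
    # Exact form based on the Gaussian CDF; a tanh approximation is also common.
    return 0.5 * x * (1.0 + math.erf(x / math.sqrt(2.0)))


def hard_sigmoid(x):
    # Piecewise-linear sigmoid clip(x/6 + 0.5, 0, 1); older Keras releases used clip(0.2*x + 0.5, 0, 1).
    return min(1.0, max(0.0, x / 6.0 + 0.5))


def leaky_relu(x, alpha=0.3):
    return x if x >= 0 else alpha * x


def linear(x):
    return x


def prelu(x, alpha):
    # Same shape as leaky ReLU, but alpha is a trained parameter.
    return x if x >= 0 else alpha * x


def relu(x):
    return max(0.0, x)


SELU_ALPHA = 1.6732632423543772
SELU_SCALE = 1.0507009873554805


def selu(x):
    return SELU_SCALE * (x if x > 0 else SELU_ALPHA * math.expm1(x))


def sigmoid(x):
    # Numerically stable for large |x|: never calls exp on a large positive argument.
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)


def softmax(xs):
    # Subtracting the maximum avoids overflow without changing the result.
    m = max(xs)
    exps = [math.exp(v - m) for v in xs]
    total = sum(exps)
    return [e / total for e in exps]


def softplus(x):
    # log(1 + e^x) rewritten to avoid overflow for large x.
    return max(x, 0.0) + math.log1p(math.exp(-abs(x)))


def softsign(x):
    return x / (1.0 + abs(x))


def swish(x):
    return x * sigmoid(x)


def tanh(x):
    return math.tanh(x)


def thresholded_relu(x, theta=1.0):
    return x if x > theta else 0.0
```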
