-

Quick start

-

API

-

-

- Resume

- Add

- AdditiveAttention

- AlphaDropout

- Attention

- Average

- AvgPool1D

- AvgPool2D

- AvgPool3D

- BatchNormalization

- Bidirectional

- Concatenate

- Conv1D

- Conv1DTranspose

- Conv2D

- Conv2DTranspose

- Conv3D

- Conv3DTranspose

- ConvLSTM1D

- ConvLSTM2D

- ConvLSTM3D

- Cropping1D

- Cropping2D

- Cropping3D

- Dense

- DepthwiseConv2D

- Dropout

- ELU

- Embedding

- Exponential

- Flatten

- GaussianDropout

- GaussianNoise

- GELU

- GlobalAvgPool1D

- GlobalAvgPool2D

- GlobalAvgPool3D

- GlobalMaxPool1D

- GlobalMaxPool2D

- GlobalMaxPool3D

- GRU

- HardSigmoid

- Input

- LayerNormalization

- LeakyReLU

- Linear

- LSTM

- MaxPool1D

- MaxPool2D

- MaxPool3D

- MultiHeadAttention

- Multiply

- Output Predict

- Output Train

- Permute3D

- PReLU

- ReLU

- Reshape

- RNN

- SELU

- SeparableConv1D

- SeparableConv2D

- Sigmoid

- SimpleRNN

- SoftMax

- SoftPlus

- SoftSign

- SpatialDropout

- Split

- Substract

- Swish

- TanH

- ThresholdedReLU

- UpSampling1D

- UpSampling2D

- UpSampling3D

- ZeroPadding1D

- ZeroPadding2D

- ZeroPadding3D

- Show All Articles (64) Collapse Articles

-

-

-

-

- Abs

- Acos

- Acosh

- ArgMax

- ArgMin

- Asin

- Asinh

- Atan

- Atanh

- AveragePool

- Bernouilli

- BitwiseNot

- BlackmanWindow

- Cast

- Ceil

- Celu

- ConcatFromSequence

- Cos

- Cosh

- DepthToSpace

- Det

- DynamicTimeWarping

- Erf

- Exp

- EyeLike

- Flatten

- Floor

- GlobalAveragePool

- GlobalLpPool

- GlobalMaxPool

- HammingWindow

- HannWindow

- HardSwish

- HardMax

- Identity

- ImageDecoder

- Inverse

- lrfft

- lslnf

- lsNaN

- Log

- LogSoftmax

- LpNormalization

- LpPool

- LRN

- MeanVarianceNormalization

- MicrosoftGelu

- Mish

- Multinomial

- MurmurHash3

- Neg

- NhwcMaxPool

- NonZero

- Not

- OptionalGetElement

- OptionalHasElement

- QuickGelu

- RandomNormalLike

- RandomUniformLike

- RawConstantOfShape

- Reciprocal

- ReduceSumInteger

- RegexFullMatch

- Rfft

- Round

- SampleOp

- SequenceLength

- Shape

- Shrink

- Sign

- Sin

- Sinh

- Size

- SpaceToDepth

- Sqrt

- StringNormalizer

- Tan

- TfldfVectorizer

- Tokenizer

- Transpose

- UnfoldTensor

- Show All Articles (66) Collapse Articles

-

-

-

- Add

- AffineGrid

- And

- BiasAdd

- BiasGelu

- BiasSoftmax

- BiasSplitGelu

- BitShift

- BitwiseAnd

- BitwiseOr

- BitwiseXor

- CastLike

- CDist

- CenterCropPad

- Clip

- Col2lm

- ComplexMul

- ComplexMulConj

- Compress

- Conv

- ConvInteger

- ConvTranspose

- ConvTransposeWithDynamicPads

- CropAndResize

- CumSum

- DeformConv

- DequantizeBFP

- DequantizeLinear

- DequantizeWithOrder

- DFT

- Div

- DynamicQuantizeMatMul

- Equal

- Expand

- ExpandDims

- FastGelu

- FusedConv

- FusedGemm

- FusedMatMul

- FusedMatMulActivation

- GatedRelativePositionBias

- Gather

- GatherElements

- GatherND

- Gemm

- GemmFastGelu

- GemmFloat8

- Greater

- GreaterOrEqual

- GreedySearch

- GridSample

- GroupNorm

- InstanceNormalization

- Less

- LessOrEqual

- LongformerAttention

- MatMul

- MatMulBnb4

- MatMulFpQ4

- MatMulInteger

- MatMulInteger16

- MatMulIntergerToFloat

- MatMulNBits

- MaxPoolWithMask

- MaxRoiPool

- MaxUnPool

- MelWeightMatrix

- MicrosoftDequantizeLinear

- MicrosoftGatherND

- MicrosoftGridSample

- MicrosoftPad

- MicrosoftQLinearConv

- MicrosoftQuantizeLinear

- MicrosoftRange

- MicrosoftTrilu

- Mod

- MoE

- Mul

- MulInteger

- NegativeLogLikelihoodLoss

- NGramRepeatBlock

- NhwcConv

- NhwcFusedConv

- NonMaxSuppression

- OneHot

- Or

- PackedAttention

- PackedMultiHeadAttention

- Pad

- Pow

- QGemm

- QLinearAdd

- QLinearAveragePool

- QLinearConcat

- QLinearConv

- QLinearGlobalAveragePool

- QLinearLeakyRelu

- QLinearMatMul

- QLinearMul

- QLinearReduceMean

- QLinearSigmoid

- QLinearSoftmax

- QLinearWhere

- QMoE

- QOrderedAttention

- QOrderedGelu

- QOrderedLayerNormalization

- QOrderedLongformerAttention

- QOrderedMatMul

- QuantizeLinear

- QuantizeWithOrder

- Range

- ReduceL1

- ReduceL2

- ReduceLogSum

- ReduceLogSumExp

- ReduceMax

- ReduceMean

- ReduceMin

- ReduceProd

- ReduceSum

- ReduceSumSquare

- RelativePositionBias

- Reshape

- Resize

- RestorePadding

- ReverseSequence

- RoiAlign

- RotaryEmbedding

- ScatterElements

- ScatterND

- SequenceAt

- SequenceErase

- SequenceInsert

- Slice

- SparseToDenseMatMul

- SplitToSequence

- Squeeze

- STFT

- StringConcat

- Sub

- Tile

- TorchEmbedding

- TransposeMatMul

- Trilu

- Unsqueeze

- Where

- WordConvEmbedding

- Xor

- Show All Articles (134) Collapse Articles

-

- Attention

- AttnLSTM

- BatchNormalization

- BiasDropout

- BifurcationDetector

- BitmaskBiasDropout

- BitmaskDropout

- DecoderAttention

- DecoderMaskedMultiHeadAttention

- DecoderMaskedSelfAttention

- Dropout

- DynamicQuantizeLSTM

- EmbedLayerNormalization

- GemmaRotaryEmbedding

- GroupQueryAttention

- GRU

- LayerNormalization

- LSTM

- MicrosoftMultiHeadAttention

- QAttention

- RemovePadding

- RNN

- Sampling

- SkipGroupNorm

- SkipLayerNormalization

- SkipSimplifiedLayerNormalization

- SoftmaxCrossEntropyLoss

- SparseAttention

- TopK

- WhisperBeamSearch

- Show All Articles (15) Collapse Articles

-

-

-

-

-

-

- Resume

- Constant

- GlorotNormal

- GlorotUniform

- HeNormal

- HeUniform

- Identity

- LecunNormal

- LecunUniform

- Ones

- Orthogonal

- RandomNormal

- RandomUnifom

- TruncatedNormal

- VarianceScaling

- Zeros

- Show All Articles (1) Collapse Articles

-

- Resume

- BinaryCrossentropy

- CategoricalCrossentropy

- CategoricalHinge

- CosineSimilarity

- Hinge

- Huber

- KLDivergence

- LogCosh

- MeanAbsoluteError

- MeanAbsolutePercentageError

- MeanSquaredError

- MeanSquaredLogarithmicError

- Poisson

- SquaredHinge

- Custom

- Show All Articles (1) Collapse Articles

-

-

-

-

-

- Dense

- PReLU 2D

- PReLU 3D

- PReLU 4D

- PReLU 5D

- AdditiveAttention

- Attention

- MutiHeadAttention

- Conv1D

- Conv2D

- Conv3D

- ConvLSTM1D

- ConvLSTM2D

- ConvLSTM3D

- Conv1DTranspose

- Conv2DTranspose

- Conv3DTranspose

- DepthwiseConv2D

- SeparableConv1D

- SeparableConv2D

- Embedding

- BatchNormalization

- LayerNormalization

- Bidirectional

- GRU

- LSTM

- SimpleRNN

- Show All Articles (12) Collapse Articles

-

- Dense

- PReLU 2D

- PReLU 3D

- PReLU 4D

- PReLU 5D

- AdditiveAttention

- Attention

- MultiHeadAttention

- Conv1D

- Conv2D

- Conv3D

- ConvLSTM1D

- ConvLSTM2D

- ConvLSTM3D

- Conv1DTranspose

- Conv2DTranspose

- Conv3DTranspose

- DepthwiseConv2D

- SeparableConv1D

- SeparableConv2D

- Embedding

- BatchNormalization

- LayerNormalization

- Bidirectional

- GRU

- LSTM

- SimpleRNN

- Show All Articles (12) Collapse Articles

-

-

- Resume

- Dense

- AdditiveAttention

- Attention

- MultiHeadAttention

- BatchNormalization

- LayerNormalization

- Bidirectional

- GRU

- LSTM

- SimpleRNN

- Conv1D

- Conv2D

- Conv3D

- Conv1DTranspose

- Conv2DTranspose

- Conv3DTranspose

- ConvLSTM1D

- ConvLSTM2D

- ConvLSTM3D

- DepthwiseConv2D

- SeparableConv1D

- SeparableConv2D

- Embedding

- PReLU 2D

- PReLU 3D

- PReLU 4D

- PReLU 5D

- Show All Articles (13) Collapse Articles

-

-

- Dense

- Embedding

- AdditiveAttention

- Attention

- MultiHeadAttention

- Conv1D

- Conv2D

- Conv3D

- ConvLSTM1D

- ConvLSTM2D

- ConvLSTM3D

- Conv1DTranspose

- Conv2DTranspose

- Conv3DTranspose

- DepthwiseConv2D

- SeparableConv1D

- SeparableConv2D

- BatchNormalization

- LayerNormalization

- PReLU 2D

- PReLU 3D

- PReLU 4D

- PReLU 5D

- Bidirectional

- GRU

- LSTM

- RNN (GRU)

- RNN (LSTM)

- RNN (SimpleRNN)

- SimpleRNN

- Show All Articles (15) Collapse Articles

-

- Dense

- Embedding

- AdditiveAttention

- Attention

- MultiHeadAttention

- Conv1D

- Conv2D

- Conv3D

- ConvLSTM1D

- ConvLSTM2D

- ConvLSTM3D

- Conv1DTranspose

- Conv2DTranspose

- Conv3DTranspose

- DepthwiseConv2D

- SeparableConv1D

- SeparableConv2D

- BatchNormalization

- LayerNormalization

- PReLU 2D

- PReLU 3D

- PReLU 4D

- PReLU 5D

- Bidirectional

- GRU

- LSTM

- RNN (GRU)

- RNN (LSTM)

- RNN (SimpleRNN)

- SimpleRNN

- Show All Articles (15) Collapse Articles

-

-

-

- Dense

- Embedding

- AdditiveAttention

- Attention

- MultiHeadAttention

- Conv1D

- Conv2D

- Conv3D

- ConvLSTM1D

- ConvLSTM2D

- ConvLSTM3D

- Conv1DTranspose

- Conv2DTranspose

- Conv3DTranspose

- DepthwiseConv2D

- SeparableConv1D

- SeparableConv2D

- BatchNormalization

- LayerNormalization

- PReLU 2D

- PReLU 3D

- PReLU 4D

- PReLU 5D

- Bidirectional

- GRU

- LSTM

- RNN (GRU)

- RNN (LSTM)

- RNN (SimpleRNN)

- SimpleRNN

- Show All Articles (15) Collapse Articles

-

- Dense

- Embedding

- AdditiveAttention

- Attention

- MultiHeadAttention

- Conv1D

- Conv2D

- Conv3D

- ConvLSTM1D

- ConvLSTM2D

- ConvLSTM3D

- Conv1DTranspose

- Conv2DTranspose

- Conv3DTranspose

- DepthwiseConv2D

- SeparableConv1D

- SeparableConv2D

- BatchNormalization

- LayerNormalization

- PReLU 2D

- PReLU 3D

- PReLU 4D

- PReLU 5D

- Bidirectional

- GRU

- LSTM

- RNN (GRU)

- RNN (LSTM)

- RNN (SimpleRNN)

- SimpleRNN

- Show All Articles (15) Collapse Articles

-

-

- Resume

- Accuracy

- BinaryAccuracy

- BinaryCrossentropy

- BinaryIoU

- CategoricalAccuracy

- CategoricalCrossentropy

- CategoricalHinge

- CosineSimilarity

- FalseNegatives

- FalsePositives

- Hinge

- Huber

- IoU

- KLDivergence

- LogCoshError

- Mean

- MeanAbsoluteError

- MeanAbsolutePercentageError

- MeanIoU

- MeanRelativeError

- MeanSquaredError

- MeanSquaredLogarithmicError

- MeanTensor

- OneHotIoU

- OneHotMeanIoU

- Poisson

- Precision

- PrecisionAtRecall

- Recall

- RecallAtPrecision

- RootMeanSquaredError

- SensitivityAtSpecificity

- SparseCategoricalAccuracy

- SparseCategoricalCrossentropy

- SparseTopKCategoricalAccuracy

- Specificity

- SpecificityAtSensitivity

- SquaredHinge

- Sum

- TopKCategoricalAccuracy

- TrueNegatives

- TruePositives

- Show All Articles (28) Collapse Articles

-

-

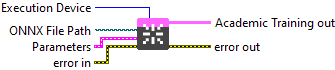

Create Academic Training Session From File

Description

Initialize an Academic Training Session from an .onnx file. Type : polymorphic.

Input parameters

![]() Execution Device : enum, selects the hardware device on which the model will run.

Execution Device : enum, selects the hardware device on which the model will run.![]() ONNX File Path : path, is the path to the model file.

ONNX File Path : path, is the path to the model file.

![]() Parameters : cluster,

Parameters : cluster,

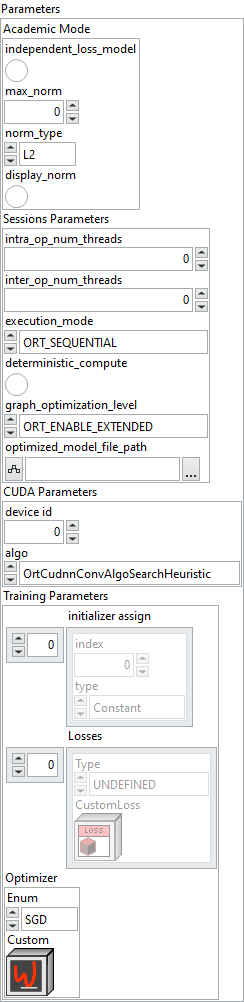

![]() Academic Mode : cluster

Academic Mode : cluster

![]() independent_loss_model : boolean, if true, splits the model into 3 stages (forward, loss, backward) instead of 2 (forward+loss, backward).

independent_loss_model : boolean, if true, splits the model into 3 stages (forward, loss, backward) instead of 2 (forward+loss, backward).![]() max_norm : float, maximum global gradient norm (enables clipping if > 0).

max_norm : float, maximum global gradient norm (enables clipping if > 0).![]() norm_type : enum, type of norm used to compute

norm_type : enum, type of norm used to compute grad_norm (commonly 1 = L1, 2 = L2).![]() display_norm : boolean, adds

display_norm : boolean, adds grad_norm as a model output if set to 1.

![]() Sessions Parameters : cluster

Sessions Parameters : cluster

![]() intra_op_num_threads : integer, number of threads used within each operator to parallelize computations. If the value is 0, ONNX Runtime automatically uses the number of physical CPU cores.

intra_op_num_threads : integer, number of threads used within each operator to parallelize computations. If the value is 0, ONNX Runtime automatically uses the number of physical CPU cores.![]() inter_op_num_threads : integer, number of threads used between operators, to execute multiple graph nodes in parallel. If set to 0, this parameter is ignored when

inter_op_num_threads : integer, number of threads used between operators, to execute multiple graph nodes in parallel. If set to 0, this parameter is ignored when execution_mode is ORT_SEQUENTIAL. In ORT_PARALLEL mode, 0 means ORT automatically selects a suitable number of threads (usually equal to the number of cores).![]() execution_mode : enum, controls whether the graph executes nodes one after another or allows parallel execution when possible.

execution_mode : enum, controls whether the graph executes nodes one after another or allows parallel execution when possible. ORT_SEQUENTIAL runs nodes in order, ORT_PARALLEL runs them concurrently.![]() deterministic_compute : boolean, forces deterministic execution, meaning results will always be identical for the same inputs.

deterministic_compute : boolean, forces deterministic execution, meaning results will always be identical for the same inputs.![]() graph_optimization_level : enum, defines how much ONNX Runtime optimizes the computation graph before running the model.

graph_optimization_level : enum, defines how much ONNX Runtime optimizes the computation graph before running the model.![]() optimized_model_file_path : path, file path to save the optimized model after graph analysis.

optimized_model_file_path : path, file path to save the optimized model after graph analysis.

![]() CUDA Parameters : cluster

CUDA Parameters : cluster

![]() device id : integer, selects which GPU to use (0 = first GPU).

device id : integer, selects which GPU to use (0 = first GPU).![]() algo : enum, controls the algorithm used for cuDNN convolutions.

algo : enum, controls the algorithm used for cuDNN convolutions.

![]() Training Parameters : cluster

Training Parameters : cluster

![]() initializer assign : array, alows you to define the status of each initializer (weight, bias, etc.) in the model.

initializer assign : array, alows you to define the status of each initializer (weight, bias, etc.) in the model.

![]() index : integer, identifies the initializer in the list.

index : integer, identifies the initializer in the list.![]() type : enum, defines its status.

type : enum, defines its status.

-

-

-

-

-

- Constant : fixed value, not modified during training.

- Frozen : value included in the model but fixed, not updated.

- Training : value optimised during training.

-

-

-

-

![]() Losses : array, configures the loss function for each model output.

Losses : array, configures the loss function for each model output.

![]() Type : enum, an enumeration indicating the loss type (e.g., MSE, CrossEntropy, etc.). If

Type : enum, an enumeration indicating the loss type (e.g., MSE, CrossEntropy, etc.). If enum is set to CustomLoss, the custom class on the right will be used as the loss function. Otherwise, the selected loss will be applied with its default configuration.![]() CustomLoss : object, a custom loss class instance.

CustomLoss : object, a custom loss class instance.

![]() Optimizer : cluster, defines the optimisation algorithm for updating weights.

Optimizer : cluster, defines the optimisation algorithm for updating weights.

![]() Enum : enum, choice of standard optimizers (SGD, Adam, etc.).

Enum : enum, choice of standard optimizers (SGD, Adam, etc.).![]() Custom : object, a custom optimizer class instance.

Custom : object, a custom optimizer class instance.

Output parameters

![]() Academic Training out : object, academic training session.

Academic Training out : object, academic training session.