activation


activation : enum, activation function to use.

Exponential Linear Unit (ELU)

The Exponential Linear Unit hyperparameter alpha controls the value to which an ELU saturates for negative net inputs. ELUs diminish the vanishing gradient effect.

ELUs have negative values, which pushes the mean of the activations closer to zero. Mean activations that are closer to zero enable faster learning because they bring the gradient closer to the natural gradient. ELUs saturate to a negative value (-alpha) as the argument becomes more negative. Saturation means a small derivative, which reduces the variation and the information propagated to the next layer.
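The saturation behaviour can be checked numerically. The Python sketch below uses the standard ELU formula (x for x > 0, alpha * (exp(x) - 1) otherwise). The function names and the default alpha = 1.0 are illustrative assumptions, not the toolkit's API. It prints the output and derivative for a few inputs, showing the output approaching -alpha and the derivative shrinking toward zero for very negative inputs.

```python
import math

def elu(x: float, alpha: float = 1.0) -> float:
    """ELU: x for x > 0, alpha * (exp(x) - 1) otherwise."""
    if x > 0:
        return x
    # expm1 computes exp(x) - 1 accurately near zero; as x -> -inf the
    # result approaches -alpha, the saturation value.
    return alpha * math.expm1(x)

def elu_grad(x: float, alpha: float = 1.0) -> float:
    """Derivative of ELU: 1 for x > 0, alpha * exp(x) otherwise."""
    return 1.0 if x > 0 else alpha * math.exp(x)

for x in (-10.0, -2.0, -0.5, 0.0, 1.5):
    print(f"x={x:+5.1f}  elu={elu(x):+.5f}  grad={elu_grad(x):.5f}")
```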

Gaussian Error Linear Unit (GELU)

Gaussian Error Linear Unit (GELU) computes x * P(X <= x), where X ~ N(0, 1). The GELU nonlinearity weights inputs by their value, rather than gating inputs by their sign as in ReLU.

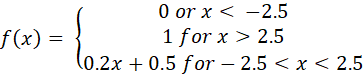
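The following Python sketch implements the exact form x * P(X <= x), writing the standard normal CDF with the error function. Many frameworks also provide a tanh-based approximation, and which form the toolkit uses is not stated here. The function names are illustrative. Printing GELU next to ReLU shows the difference described above: small negative inputs produce small negative outputs instead of being cut to zero.

```python
import math

def standard_normal_cdf(x: float) -> float:
    """P(X <= x) for X ~ N(0, 1), via the error function."""
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))

def gelu(x: float) -> float:
    """Exact GELU: weight the input by the probability P(X <= x)."""
    return x * standard_normal_cdf(x)

def relu(x: float) -> float:
    """ReLU for comparison: gate the input by its sign."""
    return max(x, 0.0)

for x in (-3.0, -0.5, 0.0, 0.5, 3.0):
    print(f"x={x:+.1f}  gelu={gelu(x):+.4f}  relu={relu(x):+.4f}")
```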

Hard sigmoid

A faster, piecewise linear approximation of the sigmoid activation.

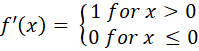
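As a sketch of the piecewise linear idea, the Python code below clips a straight line to the range [0, 1] and compares it with the exact sigmoid. The slope 0.2 and offset 0.5 (breakpoints at x = -2.5 and x = 2.5) follow an older Keras definition; other libraries use slope 1/6 with breakpoints at -3 and 3. Which variant this toolkit uses is an assumption, so both values are exposed as parameters.

```python
import math

def hard_sigmoid(x: float, slope: float = 0.2, offset: float = 0.5) -> float:
    """Piecewise linear sigmoid: slope * x + offset, clipped to [0, 1]."""
    return min(1.0, max(0.0, slope * x + offset))

def sigmoid(x: float) -> float:
    """Exact logistic sigmoid, for comparison."""
    return 1.0 / (1.0 + math.exp(-x))

for x in (-3.0, -1.0, 0.0, 1.0, 3.0):
    print(f"{x:+.1f}  hard={hard_sigmoid(x):.3f}  exact={sigmoid(x):.3f}")
```

The clipped line needs only a multiply, an add, and two comparisons, which is why it is cheaper than evaluating exp().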

Leaky Rectified Linear Unit (Leaky ReLU)

Leaky version of a Rectified Linear Unit. It allows a small gradient when the unit is not active: f(x) = x for x >= 0, and f(x) = alpha * x for x < 0, where alpha is a small positive slope.
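A minimal Python sketch of the Leaky ReLU formula follows. The value alpha = 0.2 is an illustrative assumption, not the toolkit's documented default; libraries differ (for example 0.2 or 0.3).

```python
def leaky_relu(x: float, alpha: float = 0.2) -> float:
    """Leaky ReLU: x for x >= 0, alpha * x for x < 0."""
    # Unlike ReLU, negative inputs keep a small non-zero slope (alpha),
    # so the gradient for x < 0 is alpha rather than 0.
    return x if x >= 0 else alpha * x

for x in (-5.0, -1.0, 0.0, 2.0):
    print(x, leaky_relu(x))
```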

Rectified Linear Unit (ReLU)

With default values, this returns the standard ReLU activation: max(x,0), the element-wise maximum of 0 and the input tensor.

Modifying default parameters allows you to use non-zero thresholds, change the max value of the activation, and to use a non-zero multiple of the input for values below the threshold.

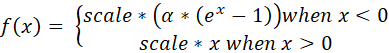
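The parameters mentioned above (threshold, maximum value, and slope below the threshold) can be sketched in Python as follows. The behaviour mirrors the Keras `relu(x, alpha, max_value, threshold)` semantics. The parameter names here are hypothetical, and whether the toolkit exposes these parameters through the activation enum is not stated in this section.

```python
from typing import Optional

def relu(x: float,
         negative_slope: float = 0.0,
         max_value: Optional[float] = None,
         threshold: float = 0.0) -> float:
    """Parameterized ReLU; with the defaults this is max(x, 0)."""
    if x >= threshold:
        y = x
    elif negative_slope == 0.0:
        # Standard case: everything below the threshold maps to zero.
        y = 0.0
    else:
        # Below the threshold, scale the distance to the threshold.
        y = negative_slope * (x - threshold)
    if max_value is not None:
        # Cap the activation at max_value (e.g. 6.0 gives ReLU6).
        y = min(y, max_value)
    return y

print(relu(-3.0), relu(2.5))           # standard ReLU
print(relu(10.0, max_value=6.0))       # capped output
print(relu(0.5, threshold=1.0))        # below a non-zero threshold
print(relu(-2.0, negative_slope=0.1))  # non-zero multiple below the threshold
```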

Scaled Exponential Linear Unit (SELU)

The Scaled Exponential Linear Unit (SELU) activation function is defined as:

- if x > 0: return scale * x

- if x < 0: return scale * alpha * (exp(x) - 1)

where alpha and scale are pre-defined constants (alpha = 1.67326324 and scale = 1.05070098).

The SELU activation function multiplies the output of the ELU activation by scale (> 1) to ensure a slope larger than one for positive inputs.

The values of alpha and scale are chosen so that the mean and variance of the inputs are preserved between two consecutive layers as long as the weights are initialized correctly and the number of input units is “large enough”.

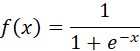
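The Python sketch below implements SELU with the constants given above and illustrates the mean and variance claim in the simplest setting: inputs that are already standard normal. It samples 100,000 values from N(0, 1), applies SELU, and prints the mean and variance of the outputs, which should stay close to 0 and 1. It does not model weights, initialization, or stacked layers; the sample size and seed are arbitrary choices.

```python
import math
import random
import statistics

ALPHA = 1.67326324
SCALE = 1.05070098

def selu(x: float) -> float:
    """SELU: scale * x for x > 0, scale * alpha * (exp(x) - 1) otherwise."""
    if x > 0:
        return SCALE * x
    return SCALE * ALPHA * math.expm1(x)

# Feed standard-normal inputs (mean 0, variance 1) through SELU and check
# that the outputs keep approximately the same mean and variance.
rng = random.Random(0)
inputs = [rng.gauss(0.0, 1.0) for _ in range(100_000)]
outputs = [selu(v) for v in inputs]
print(f"mean={statistics.fmean(outputs):+.4f}  var={statistics.pvariance(outputs):.4f}")
```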

Sigmoid

Applies the sigmoid activation function. For small values (<-5), sigmoid returns a value close to zero, and for large values (>5) the result of the function gets close to 1.

Sigmoid is equivalent to a 2-element Softmax, where the second element is assumed to be zero. The sigmoid function always returns a value between 0 and 1.

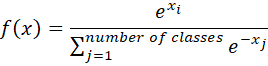
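The equivalence with a 2-element Softmax follows from softmax([x, 0])[0] = exp(x) / (exp(x) + 1) = 1 / (1 + exp(-x)). The Python sketch below (function names are illustrative) computes both forms in a numerically stable way and can be used to confirm that they agree.

```python
import math

def sigmoid(x: float) -> float:
    """Logistic sigmoid, branching on the sign so exp() never overflows."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)

def softmax_first_of_pair(x: float) -> float:
    """First output of softmax([x, 0]); the second logit is fixed at zero."""
    m = max(x, 0.0)
    a, b = math.exp(x - m), math.exp(-m)
    return a / (a + b)

for x in (-6.0, -5.0, 0.0, 5.0, 6.0):
    print(f"x={x:+.1f}  sigmoid={sigmoid(x):.4f}  softmax={softmax_first_of_pair(x):.4f}")
```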

Softmax

The elements of the output vector are in the range (0, 1) and sum to 1. Each vector is handled independently. The axis argument sets the axis of the input along which the function is applied. Softmax is often used as the activation for the last layer of a classification network because the result can be interpreted as a probability distribution. The input values are the log-odds of the resulting probability.
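A minimal Python sketch of softmax on plain lists follows; the function names are illustrative. `softmax_rows` stands in for applying the function along the last axis of a 2-D input, with each row handled independently. The final line shows one concrete reading of the log-odds remark: the log-ratio of two outputs equals the difference of the corresponding inputs.

```python
import math
from typing import List, Sequence

def softmax(logits: Sequence[float]) -> List[float]:
    """Softmax of one vector; the outputs sum to 1."""
    # Subtracting the maximum avoids overflow in exp() and does not change
    # the result, because softmax is invariant to adding a constant.
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]

def softmax_rows(batch: Sequence[Sequence[float]]) -> List[List[float]]:
    """Apply softmax along the last axis: each row is handled independently."""
    return [softmax(row) for row in batch]

probs = softmax([2.0, 1.0, 0.1])
print(probs, sum(probs))
# The log-ratio of two outputs equals the difference of their inputs.
print(math.log(probs[0] / probs[1]))  # approximately 2.0 - 1.0 = 1.0
```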

Swish

Swish computes x * sigmoid(x). It is a smooth, non-monotonic function that is unbounded above and bounded below.
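The Python sketch below implements Swish as x * sigmoid(x) and scans a grid of inputs to locate its minimum. This illustrates that the function dips below zero for moderately negative inputs and then returns toward zero, which makes it non-monotonic and bounded below. The grid range and step are arbitrary choices.

```python
import math

def sigmoid(x: float) -> float:
    """Numerically stable logistic sigmoid."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)

def swish(x: float) -> float:
    """Swish: x * sigmoid(x)."""
    return x * sigmoid(x)

# Scan x in [-10, 10] with step 0.01 and report where swish is lowest.
grid = [i / 100 for i in range(-1000, 1001)]
lowest = min(grid, key=swish)
print(f"minimum near x={lowest:.2f}, swish={swish(lowest):.4f}")
```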

This parameter is used in the add_to_graph and define VIs of the Conv1D, Conv1DTranspose, Conv2D, Conv2DTranspose, Conv3D, Conv3DTranspose, Dense, DepthwiseConv2D, GRU, LSTM, SeparableConv1D, SeparableConv2D, SimpleRNN, ConvLSTM1DCell, ConvLSTM2DCell, ConvLSTM3DCell, GRUCell, LSTMCell, SimpleRNNCell layers.